Hyperparameter tuning using GridSearchCV

Hyperparameters are parameters that are set before the learning process of a machine learning model begins. They act like knobs and switches that can be adjusted to different settings to improve the model's learning. Unlike regular parameters, such as the weights of a regression model, hyperparameters are not learned from the data by the model itself.

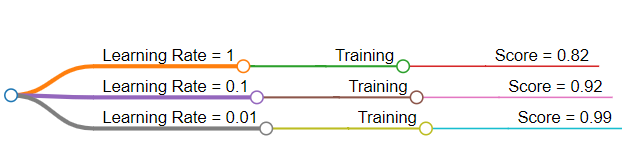

For example, the learning rate of a linear regression model trained with gradient descent controls the size of each update to the model's weights. If the learning rate is set too high, training moves quickly but can overshoot the best weights and end with larger errors, or fail to converge at all. If it is set too low, each update is small, so the model needs many more iterations to reach a good solution. The quality of the model's predictions is measured by its score, which varies with the chosen hyperparameters.
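The learning-rate trade-off described above can be seen in a small experiment. The following is a minimal sketch, not a production implementation: it fits a one-feature linear regression by plain gradient descent on made-up data, and the function name `final_mse`, the step count, and the three learning rates are all assumptions chosen for illustration.

```python
# Toy data lying exactly on the line y = 2x + 1 (made up for illustration).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]


def final_mse(learning_rate, steps=100):
    """Fit y = w*x + b by gradient descent and return the final mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Prediction error for every sample with the current weights.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # The learning rate scales how far each update moves the weights.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return sum(((w * x + b) - y) ** 2 for x, y in zip(xs, ys)) / n


for lr in (0.001, 0.1, 0.2):
    print(f"learning_rate={lr}: final MSE = {final_mse(lr):.4g}")
```

On this particular data, the smallest rate is still far from the solution after 100 steps, the middle rate gets close to it, and the largest rate overshoots on every step so the error grows instead of shrinking.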

During the training of a machine learning model, there can be multiple hyperparameters that affect the model's performance. These hyperparameters need to be tuned to find the best combination that yields the highest score.

Hyperparameter Tuning

To find the best set of hyperparameters, search techniques such as GridSearchCV and Random Search are commonly used.

GridSearchCV: CV stands for cross-validation. The data is first divided into two segments: the training dataset and the test dataset. The training dataset is then further divided into training and validation subsets.

Cross-validation uses the validation subset to estimate how a model trained on the rest of the training data performs on unseen data. In k-fold cross-validation, the training data is split into k folds, each fold serves once as the validation subset, and the k validation scores are averaged. Grid search uses this method to find the set of hyperparameters that gives the best validation score. It builds a grid of every combination of the hyperparameter values provided by the user, trains a machine learning model for each combination, and compares the models by their validation scores.
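To make the splitting of the training data concrete, here is a minimal sketch of how k-fold cross-validation could partition sample indices. The helper `k_fold_indices` is a hypothetical name written for this illustration; scikit-learn has its own splitters, and this sketch does not claim to reproduce their exact behavior (for example, it does not shuffle).

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size


folds = list(k_fold_indices(n_samples=10, k=5))
for fold_number, (train, validation) in enumerate(folds, start=1):
    print(f"fold {fold_number}: train={train} validation={validation}")
```

Each sample lands in the validation subset exactly once, so every candidate set of hyperparameters is scored on data the corresponding model was not trained on.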

Example

In the given example, we have five possible values for Hyperparameter 1 and four possible values for Hyperparameter 2.

Hyperparameter 1 = [0.1, 0.2, 0.3, 0.4, 0.5]

Hyperparameter 2 = [0.1, 0.2, 0.3, 0.4]

There are a total of twenty validation scores in the grid (5 × 4 combinations), which means that twenty machine learning models need to be created and evaluated, one for each hyperparameter combination. With cross-validation, each of these models is additionally trained once per fold. Grid search is exhaustive, so it can take a significant amount of time to compute the best set of hyperparameters.
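The grid for this example can be enumerated in a few lines. This is a sketch only: `validation_score` is a hypothetical stand-in with a made-up formula, used in place of actually training and validating a model, so that the search loop itself is easy to follow.

```python
from itertools import product

hyperparameter_1 = [0.1, 0.2, 0.3, 0.4, 0.5]
hyperparameter_2 = [0.1, 0.2, 0.3, 0.4]


def validation_score(h1, h2):
    """Hypothetical stand-in for training a model and scoring it on validation data."""
    # Made-up score that peaks at h1 = 0.3, h2 = 0.2; not a real model.
    return -((h1 - 0.3) ** 2 + (h2 - 0.2) ** 2)


# Every pairing of the two value lists: 5 * 4 = 20 combinations.
grid = list(product(hyperparameter_1, hyperparameter_2))

# Score every combination, then keep the one with the highest score.
scores = {combo: validation_score(*combo) for combo in grid}
best_combo = max(scores, key=scores.get)

print("Combinations evaluated:", len(grid))
print("Best combination:", best_combo)
```

Adding a third hyperparameter with, say, three values would multiply the number of combinations to 60, which is why exhaustive grids become expensive quickly.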

Scikit-learn example

Scikit-learn is a Python library for machine learning. It provides the GridSearchCV class for finding the best set of hyperparameters. Here is an example:

    from sklearn.model_selection import GridSearchCV, train_test_split
    from sklearn.ensemble import GradientBoostingRegressor
    from sklearn.datasets import load_diabetes

    X, y = load_diabetes(return_X_y=True)
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.33, random_state=42)

    # Creating a Model
    model = GradientBoostingRegressor()

    # Define the hyperparameter grid
    param_grid = {'learning_rate': [0.02, 0.2, 1],
                  'max_depth': [2, 4, 6, 8, 10]
    }

    # Create the GridSearchCV object
    grid_search = GridSearchCV(estimator=model, param_grid=param_grid,
                               cv=5, n_jobs=-1)

    # Perform the grid search
    grid_search.fit(X_train, y_train)

    # Access the best hyperparameters and best score (R^2 is the regressor's default metric)
    best_learning_rate = grid_search.best_params_['learning_rate']
    best_max_depth = grid_search.best_params_['max_depth']
    best_score = grid_search.best_score_

    # Print the results
    print("Best learning rate:", best_learning_rate)
    print("Best Depth:", best_max_depth)
    print("Best score (mean cross-validated R^2):", best_score)

Explanation

Lines 1–3: We import the necessary libraries: GridSearchCV and train_test_split from sklearn.model_selection, GradientBoostingRegressor from sklearn.ensemble, and load_diabetes from sklearn.datasets.

Lines 5–6: The load_diabetes function is used to load the diabetes dataset, which contains input features (X) and target values (y). The return_X_y=True argument ensures that the function returns the data in separate X and y arrays. train_test_split is used to split the data into training and testing sets. Here, 33% of the data is reserved for testing (test_size=0.33), and the random_state is set to 42 for reproducibility.

Line 9: An instance of the GradientBoostingRegressor class is created without specifying any hyperparameters, so it starts with the default values. These will be tuned later using grid search.

Lines 12–14: The param_grid dictionary defines the values to be searched for the hyperparameters of the model.

Line 17: The GridSearchCV object is created with the arguments:

estimator: The model object on which grid search will be performed (in this case, the gradient boosting regressor model).

param_grid: The dictionary specifying the hyperparameter grid.

cv: The number of cross-validation folds (here, 5-fold cross-validation is used).

n_jobs: The number of parallel jobs to run (-1 indicates using all available processors).

Line 21: The fit method is called on the grid_search object with the training data (X_train and y_train).

Lines 24–26: The best hyperparameters found by grid search are accessed through the best_params_ attribute of the grid_search object. The best score is accessed through the best_score_ attribute; it is the mean cross-validated score of the best combination. Because no scoring argument is passed, GridSearchCV uses the estimator's default score, which for a regressor is the coefficient of determination (R²) rather than the mean squared error (MSE), so higher values are better.

Lines 29–31: The best learning rate, best max depth, and best score are printed to the console.
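If a mean squared error is wanted instead of the default score, one option is to pass a scoring argument and then check the tuned model on the held-out test set. The sketch below assumes a deliberately small grid and a fixed random_state on the regressor so that it runs quickly and repeatably; those values are illustrative choices, not recommendations.

```python
from sklearn.datasets import load_diabetes
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import GridSearchCV, train_test_split

X, y = load_diabetes(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.33, random_state=42
)

# A deliberately small grid (assumed values) so the sketch runs quickly.
param_grid = {"learning_rate": [0.02, 0.2], "max_depth": [2, 4]}

grid_search = GridSearchCV(
    estimator=GradientBoostingRegressor(random_state=42),
    param_grid=param_grid,
    cv=5,
    scoring="neg_mean_squared_error",  # scores are maximized, so MSE is negated
)
grid_search.fit(X_train, y_train)

# Undo the negation to report a conventional (positive) MSE.
best_cv_mse = -grid_search.best_score_

# With the default refit=True, predict() uses the best model refit on all training data.
test_mse = mean_squared_error(y_test, grid_search.predict(X_test))

print("Best parameters:", grid_search.best_params_)
print("Best cross-validated MSE:", best_cv_mse)
print("Test MSE:", test_mse)
```

The test set plays no part in the search itself, so the test MSE gives a separate estimate of how the selected hyperparameters perform on data the search never saw.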
