Optuna vs. Hyperopt in Python

Hyperparameter optimization is the process of selecting the combination of hyperparameter values that gives a model its best performance on the data in the shortest amount of time. A machine learning algorithm's ability to make accurate predictions depends on this process.

Therefore, hyperparameter optimization is one of the most challenging aspects of developing machine learning models.

We will look at two hyperparameter optimization libraries in Python, Optuna and Hyperopt. We will briefly describe each one, discuss its features, and then compare them.

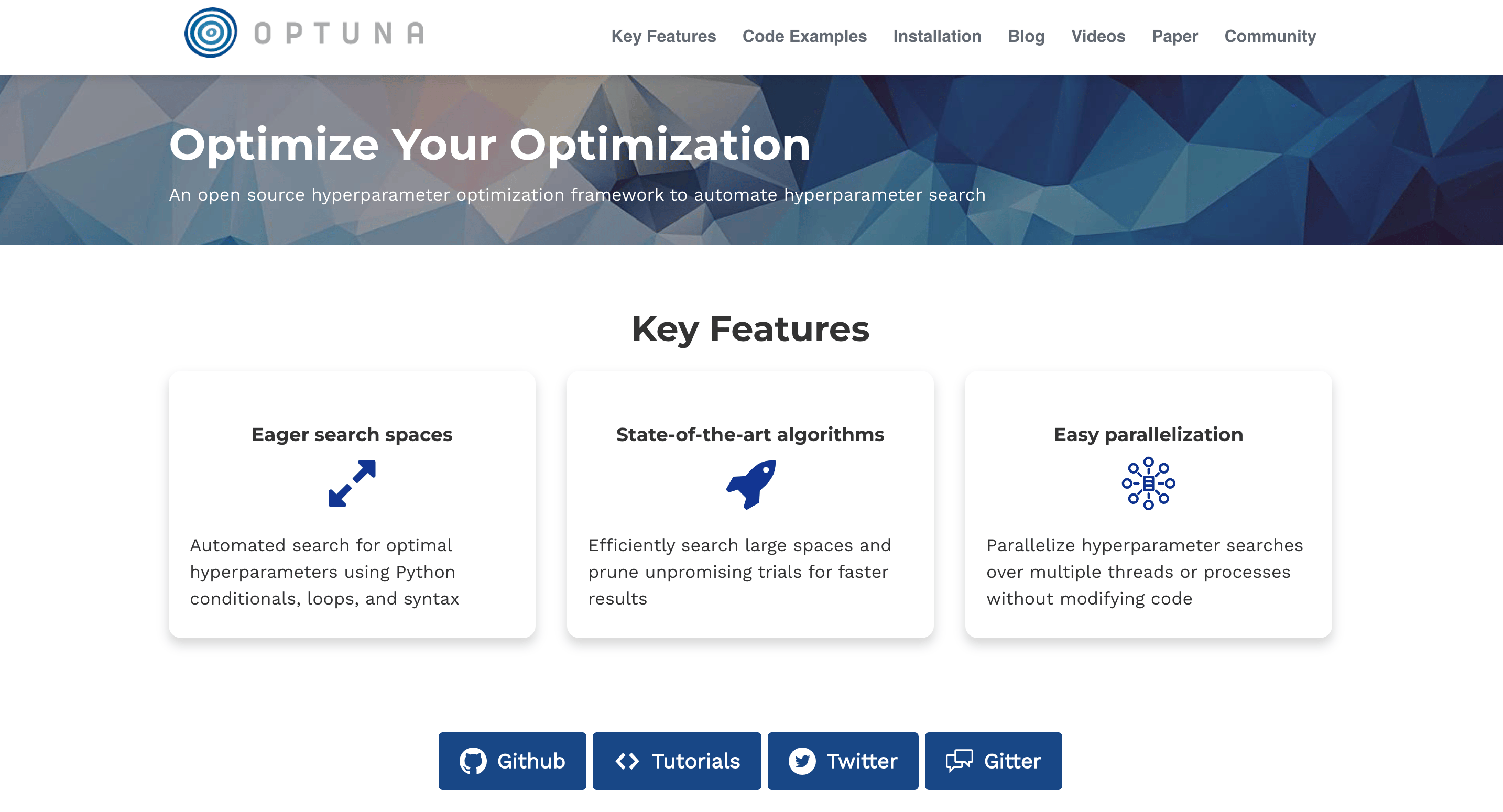

Optuna

Optuna is an open-source Python framework for hyperparameter optimization that automates the hyperparameter search using a Bayesian approach. It was created by Preferred Networks, a Japanese AI company.

Optuna features

Optimization methods (Samplers): Optuna offers a variety of algorithms for carrying out the hyperparameter optimization procedure. The most popular are:

- CmaEsSampler: https://optuna.readthedocs.io/en/v2.10.1/reference/generated/optuna.samplers.CmaEsSampler.html
- RandomSampler: https://optuna.readthedocs.io/en/v2.10.1/reference/generated/optuna.samplers.RandomSampler.html
- GridSampler: https://optuna.readthedocs.io/en/v2.10.1/reference/generated/optuna.samplers.GridSampler.html
- TPESampler: https://optuna.readthedocs.io/en/v2.10.1/reference/generated/optuna.samplers.TPESampler.html

Visualization: Optuna's visualization module offers several functions that plot the optimization results. These plots help us learn how the hyperparameters interact and how to proceed with the search. Here are a few functions we can use:

- plot_contour()
- plot_intermediate_values()
- plot_optimization_history()
- plot_edf()
- plot_param_importances()

Objective Function: The objective function follows the same idea as in hyperopt and scikit-optimize. The sole distinction is that with Optuna, we can define the search space and the objective in a single function.

Study: A study corresponds to a task requiring optimization (a set of trials). To begin the optimization process, create a study object, pass the objective function to its optimize() method, and set the number of trials. We can decide whether to maximize or minimize the objective function using the direction argument of the create_study() method. This is one of the essential elements of Optuna that I appreciate because it gives us the option to decide how the optimization process will proceed.

Search Spaces: Optuna provides options for every sort of hyperparameter. The most frequently used are:

- Categorical parameters, which use the trial.suggest_categorical() method
- Integer parameters, which use the trial.suggest_int() method
- Float parameters, which use the trial.suggest_float() method
- Continuous parameters, which use the trial.suggest_uniform() method (deprecated since Optuna v3.0 in favor of trial.suggest_float())
- Discrete parameters, which use the trial.suggest_discrete_uniform() method (deprecated since Optuna v3.0 in favor of trial.suggest_float() with a step)

Hyperopt

James Bergstra created Hyperopt, a powerful Python library for hyperparameter optimization. When tuning a model's parameters, Hyperopt uses a form of Bayesian optimization to find good values. It can perform large-scale optimization of models with hundreds of parameters.

Hyperopt features

To perform our first optimization, we must be familiar with four key features of Hyperopt.

Search space: Hyperopt provides various functions that define ranges for the input parameters; these are stochastic search spaces. The most typical choices for a search space are:

- hp.uniform(label, low, high)
- hp.choice(label, options)
- hp.randint(label, upper)

fmin: The fmin function is the optimization function. It iterates over different combinations of algorithms and their hyperparameters to minimize the objective function.

Trials object: All hyperparameters, losses, and other data are stored in the Trials object, so we can retrieve them after running the optimization. Additionally, a Trials object can help us save important information so that we can load it later and resume the optimization process.

Objective function: This is the function to minimize. It takes the hyperparameter values sampled from the search space as input and returns the loss. During the optimization process, we train the model with the chosen hyperparameter values, predict the target feature, evaluate the prediction error, and feed that error back to the optimizer. The optimizer then chooses the next values to try and repeats the cycle.

Optuna vs. Hyperopt

This section compares the two hyperparameter optimization libraries using several evaluation criteria.

Optuna vs Hyperopt table

| Evaluation criteria | Optuna | Hyperopt |
| --- | --- | --- |
| Ease of use | It has a simpler implementation and usage process than Hyperopt. | Hyperopt is also easy to use, but not as easy as Optuna. |
| Callbacks | Optuna makes it really easy with the callbacks argument. | In Hyperopt, we have to modify the objective function. |
| Run pruning | Optuna gives us the ability to perform pruning callbacks, with many frameworks supported. | Hyperopt doesn't have this ability. |
| Handling exceptions | The .optimize() method in Optuna allows us to pass the permitted exceptions. | Hyperopt does not offer an equivalent way to pass a list of permitted exceptions. |
| Documentation | The Optuna documentation is really good and well maintained. It explains all the basic concepts and shows us where to find more information. | The documentation is alright, but it has missing API references, most methods and functions lack docstrings, and some links in the docs lead to 404 pages. |
| Visualizations | The visualizations in Optuna are excellent; a number of them are available in the optuna.visualization module. | The hyperopt.plotting module includes some basic visualization utilities and three visualization functions, but they are not very helpful. |
| Speed | It is very fast, and it is easy to run distributed hyperparameter optimization on a single system or a cluster of machines. | Works as we would expect it to, but not as quickly as Optuna. |

Conclusion

Both libraries perform admirably, but Optuna comes out slightly ahead thanks to its flexibility, its imperative approach to sampling parameters, and a modest reduction in boilerplate.
