The Kubernetes architecture simplified

Let’s understand the Kubernetes architecture in an easy way…

Kubernetes is a production-grade container orchestration system that automates the deployment, scaling, and management of containers.

To learn any tool or technology, you should start with its terminology and architecture. Real-world applications, tools, and architectures are complex by nature, so the goal of this blog post is to explain the Kubernetes architecture in an easy way.

High-level overview

The purpose of Kubernetes is to host your application, in the form of containers, in an automated fashion so that you can easily deploy, scale-in and scale-out (as required), and enable communications between different services inside your application.

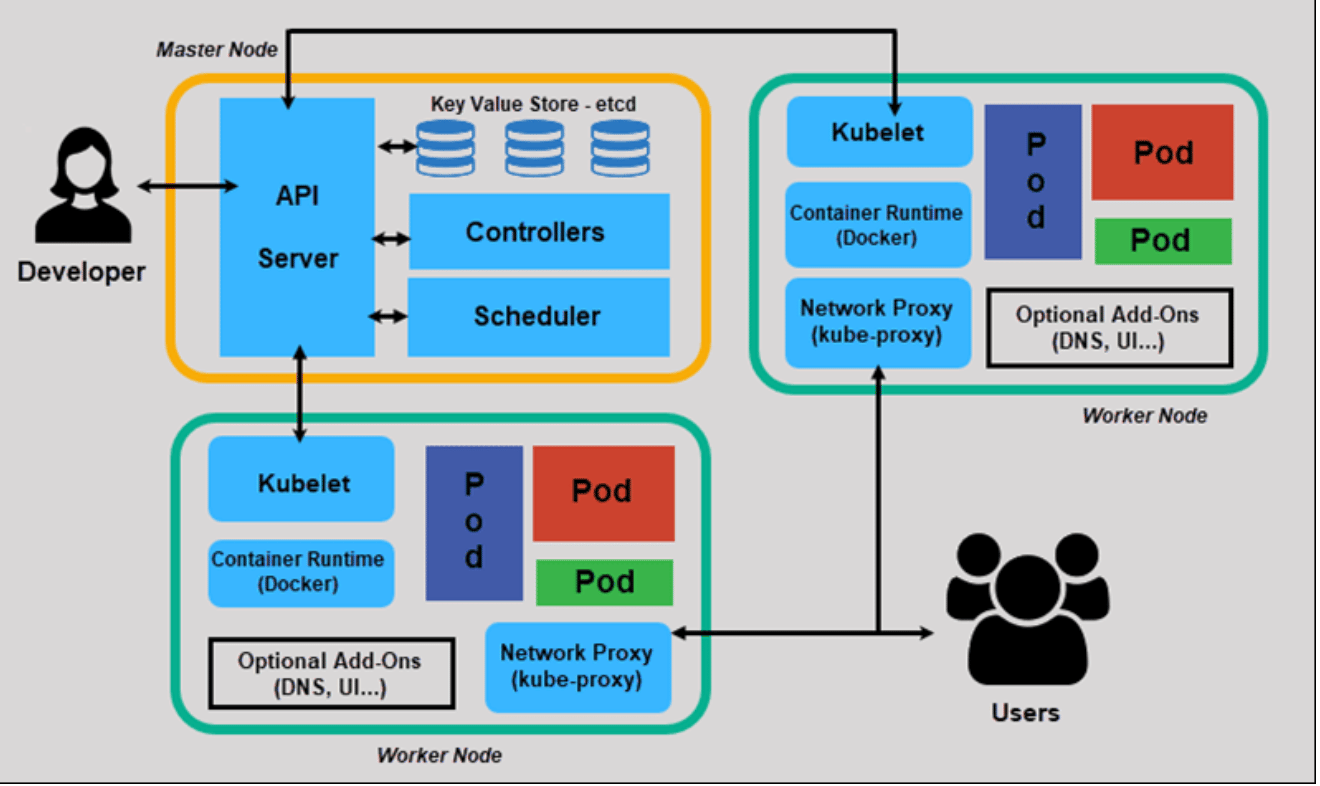

Kubernetes follows the distributed systems paradigm, where a cluster (i.e., a collection of nodes) appears to its users as one giant machine. With distributed systems comes the concept of master-slave: one or more nodes act as masters, and the rest act as slaves (workers). The master node coordinates the various activities and supervises the entire cluster of nodes. The Kubernetes architecture works the same way: there are one or more master nodes and zero or more worker nodes (often simply called nodes).

In the early days, a worker node was called a “minion.” Think of a project that you divide into multiple modules: at least one manager monitors and manages the progress, while the other team members work on the individual modules to complete the project.

Whether you have a single-node cluster or a thousand-machine cluster, deploying an application works the same way. The specific nodes where your application runs shouldn’t matter, because they all operate in the same fashion.

Kubernetes (K8s) is an open-source system for automated deployment, scaling, and management of containerized applications.

Most cloud-native applications follow the 12-factor app methodology, and the Kubernetes architecture is no exception.

Kubernetes Architecture

The master node is responsible for managing the entire cluster. It monitors the health of all the nodes in the cluster, stores membership information about the nodes, plans which containers are scheduled to which worker nodes, monitors containers and nodes, etc. So, when a worker node fails, the master moves the workload from the failed node to another healthy worker node.

The Kubernetes master is responsible for scheduling, provisioning, configuring, and exposing APIs to the client. The master node does all of this through the control plane components. Kubernetes takes care of service discovery, scaling, load balancing, self-healing, leader election, etc. Therefore, developers no longer have to build these services inside their applications.

Four basic components of the master node (control plane):

- API server

- Scheduler

- Controller manager

- Etcd

The API server is the central component through which all the cluster components communicate. The scheduler, the controller manager, and the worker node components all talk to the API server. The scheduler and controller manager request information from the API server before taking any action. The API server is also what exposes the Kubernetes API.

The scheduler is responsible for assigning your application to the worker nodes. It automatically decides which pod should be placed on which node based on resource requirements, hardware constraints, and other factors, and it picks the most suitable node that fulfills the requirements to run the application.
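The placement decision described above can be pictured as a filter step followed by a scoring step. The following is a minimal sketch under simplifying assumptions, not the actual scheduler: the `Node` and `PodRequest` classes, the two resource fields, and the "most headroom wins" scoring rule are all made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    # Hypothetical view of a worker node: only free CPU and memory are tracked.
    name: str
    free_cpu_millis: int
    free_memory_mib: int


@dataclass
class PodRequest:
    # Hypothetical resource request for a single pod.
    name: str
    cpu_millis: int
    memory_mib: int


def fits(node: Node, pod: PodRequest) -> bool:
    """Filter step: the node must have enough free CPU and memory."""
    return (node.free_cpu_millis >= pod.cpu_millis
            and node.free_memory_mib >= pod.memory_mib)


def score(node: Node, pod: PodRequest) -> float:
    """Score step (assumed rule): prefer the node with the most headroom left."""
    def left(free: int, wanted: int) -> float:
        return (free - wanted) / free if free else 0.0

    return (left(node.free_cpu_millis, pod.cpu_millis)
            + left(node.free_memory_mib, pod.memory_mib)) / 2


def pick_node(nodes: List[Node], pod: PodRequest) -> Optional[Node]:
    """Return the best-scoring node that fits, or None if nothing fits."""
    candidates = [n for n in nodes if fits(n, pod)]
    if not candidates:
        return None
    return max(candidates, key=lambda n: score(n, pod))


if __name__ == "__main__":
    cluster = [Node("node-a", 500, 1024), Node("node-b", 4000, 8192)]
    chosen = pick_node(cluster, PodRequest("web", 250, 512))
    print(chosen.name if chosen else "unschedulable")
```

A pod that fits nowhere simply gets no node in this sketch; the real scheduler weighs many more factors than these two numbers.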

The controller manager maintains the cluster. It handles node failures, replicates components, maintains the correct number of pods, etc. It constantly compares the desired state of the system with its current state and acts on any difference to bring the system back to the desired state.
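The idea of comparing desired and current state can be sketched as a small reconciliation step. This is a toy illustration, not the controller manager's code: the `plan`, `fresh_name`, and `reconcile_once` helpers and the `pod-N` naming are assumptions made for the example, and pods are reduced to a set of names.

```python
import itertools


def plan(desired_replicas, running):
    """Compare desired and observed state and list the actions needed."""
    diff = desired_replicas - len(running)
    if diff > 0:
        # Too few pods: create the missing ones.
        return [("create", None)] * diff
    if diff < 0:
        # Too many pods: delete the surplus (picked by name order here).
        surplus = sorted(running)[:-diff]
        return [("delete", name) for name in surplus]
    return []


def fresh_name(running, counter):
    """Generate a pod name that is not already in use (hypothetical scheme)."""
    while True:
        name = f"pod-{next(counter)}"
        if name not in running:
            return name


def reconcile_once(desired_replicas, running, counter):
    """Apply one round of planned actions to the set of running pod names."""
    for action, name in plan(desired_replicas, running):
        if action == "create":
            running.add(fresh_name(running, counter))
        else:
            running.discard(name)
    return running


if __name__ == "__main__":
    counter = itertools.count()
    pods = set()
    reconcile_once(3, pods, counter)   # start three replicas
    pods.pop()                         # simulate a pod disappearing
    reconcile_once(3, pods, counter)   # the loop restores the count
    print(sorted(pods))
```

Running the step repeatedly is what gives the self-healing behavior: whenever the observed count drifts, the next pass corrects it.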

Etcd is the data store that holds the cluster configuration. Because it is the source of truth for your cluster, it is recommended that you keep a backup of it. If anything were to happen, you can restore all the cluster components from this stored configuration. Etcd is a distributed, reliable key-value store: the configuration is stored as key-value pairs, and no fixed schema is imposed on the values.
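To make the key-value and backup ideas concrete, here is a toy in-memory stand-in. It is not the etcd client API, and the `TinyKV` class and the key layout used in the demo are invented purely for illustration.

```python
import copy


class TinyKV:
    """A toy, single-process key-value store (a stand-in, not etcd itself)."""

    def __init__(self):
        self._data = {}

    def put(self, key, value):
        self._data[key] = value

    def get(self, key):
        return self._data.get(key)

    def list_prefix(self, prefix):
        """Return all entries whose key starts with the given prefix."""
        return {k: v for k, v in sorted(self._data.items())
                if k.startswith(prefix)}

    def snapshot(self):
        """Take a backup: a deep copy of everything stored."""
        return copy.deepcopy(self._data)

    def restore(self, snap):
        """Replace the current contents with a previously taken backup."""
        self._data = copy.deepcopy(snap)


if __name__ == "__main__":
    store = TinyKV()
    # Hypothetical keys; values are free-form, so no schema is enforced.
    store.put("/demo/deployments/web", {"replicas": 3})
    store.put("/demo/services/web", {"port": 80})
    backup = store.snapshot()
    store.put("/demo/deployments/web", {"replicas": 0})  # a bad change
    store.restore(backup)                                # roll back
    print(store.get("/demo/deployments/web"))
```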

A worker node is simply a virtual machine (VM) running in the cloud or a physical server running on-prem (inside your data center). Any hardware capable of running a container runtime can become a worker node. These nodes expose the underlying compute, storage, and networking to the applications, and they do the heavy lifting for the applications running inside the Kubernetes cluster. Together, these nodes form a cluster, and workloads are assigned to them by the master node components, similar to how a manager would assign a task to a team member. This is how fault tolerance and replication are achieved.

There are three basic components of the worker node (data plane):

- Kubelet

- Kube-proxy

- Container runtime

The kubelet runs and manages the containers on a node and talks to the API server. When the scheduler sets a pod’s spec.nodeName to the name of a worker node, the kubelet on that node is notified by the API server and contacts the container runtime (like Docker) to pull the images that are required to run the pod.
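The hand-off from scheduler to kubelet to container runtime can be sketched as follows. This is a simplified model under stated assumptions: pods are flat dictionaries with a single image (real pod objects nest these fields and may hold several containers), and `FakeRuntime` and `sync_node` are hypothetical names, not real kubelet or runtime interfaces.

```python
class FakeRuntime:
    """Hypothetical stand-in for a container runtime."""

    def __init__(self):
        self.images = set()   # images already pulled to this node
        self.running = {}     # pod name -> image

    def pull(self, image):
        self.images.add(image)

    def start(self, pod_name, image):
        if image not in self.images:
            raise RuntimeError(f"image {image!r} has not been pulled")
        self.running[pod_name] = image


def sync_node(node_name, pods, runtime):
    """Start every pod assigned to this node that is not running yet."""
    started = []
    for pod in pods:
        if pod.get("nodeName") != node_name:
            continue  # unscheduled, or assigned to another node
        if pod["name"] in runtime.running:
            continue  # already running here
        runtime.pull(pod["image"])
        runtime.start(pod["name"], pod["image"])
        started.append(pod["name"])
    return started


if __name__ == "__main__":
    pods = [
        {"name": "web-1", "image": "example/web:1", "nodeName": "node-a"},
        {"name": "web-2", "image": "example/web:1", "nodeName": "node-b"},
        {"name": "job-1", "image": "example/job:1", "nodeName": None},
    ]
    print(sync_node("node-a", pods, FakeRuntime()))
```

Calling `sync_node` a second time with the same runtime starts nothing new, which mirrors how the kubelet only acts on pods that are assigned to its node and not yet running.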

The kube-proxy load balances traffic between application components. Also called a service proxy, it runs on each node in the Kubernetes cluster. It constantly watches for new services and creates rules on each node that forward traffic addressed to those services to their backend pods.
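The effect of those forwarding rules can be illustrated with a toy routing table. This sketch only models the outcome (a service name mapped onto one of its backend pods); the real kube-proxy programs rules in the node's network stack rather than forwarding traffic in application code, and the `ServiceTable` class and the round-robin choice are assumptions made for the example.

```python
class ServiceTable:
    """Toy per-node table mapping a service name to its backend pod IPs."""

    def __init__(self):
        self._backends = {}
        self._cursors = {}

    def set_backends(self, service, pod_ips):
        """Create or refresh the rule for a service when its pods change."""
        self._backends[service] = list(pod_ips)
        self._cursors[service] = 0

    def route(self, service):
        """Pick the next backend for a service, rotating through the list."""
        backends = self._backends.get(service)
        if not backends:
            raise LookupError(f"no backends for service {service!r}")
        index = self._cursors[service] % len(backends)
        self._cursors[service] = index + 1
        return backends[index]


if __name__ == "__main__":
    table = ServiceTable()
    table.set_backends("web", ["10.0.0.1", "10.0.0.2"])
    for _ in range(3):
        print(table.route("web"))
```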

The container runtime, such as Docker, rkt, or containerd, is what actually runs the containers. Once you have a specification that describes the image for your application, the container runtime pulls the image and runs the containers.

Pods are the smallest unit of deployment in Kubernetes, just as a container is the smallest unit of deployment in Docker. As a rough analogy, you can think of a pod as a lightweight VM. Each pod consists of one or more containers. Pods are ephemeral by nature, as they come and go, while containers are stateless by nature. Usually, we run a single container inside a pod, but there are scenarios where we run multiple containers that depend on each other inside a single pod. Each time a pod spins up, it gets a new IP address from a virtual IP range assigned by the pod networking solution.

kubectl is a command-line utility through which we can communicate with the Kubernetes cluster and instruct it to carry out a certain task. There are two ways to instruct the API server to create, update, or delete resources in a Kubernetes cluster: the imperative way and the declarative way. If you are just getting started, you can begin with the imperative way, but in a production scenario the best practice is to use the declarative way. Either way, kubectl turns your input into requests to the API server; an imperative command such as kubectl create deployment results in the same kind of Deployment object that you would otherwise declare in a manifest.
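As a sketch of the declarative way, the snippet below builds a minimal Deployment manifest as plain data and prints it as JSON. The `deployment_manifest` helper, the `web` name, and the `nginx:1.25` image are placeholders chosen for illustration; the field layout follows the `apps/v1` Deployment shape, trimmed to the essentials.

```python
import json


def deployment_manifest(name, image, replicas):
    """Describe the desired state: `replicas` copies of one container."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Placeholder values; substitute your own name, image, and replica count.
    print(json.dumps(deployment_manifest("web", "nginx:1.25", 3), indent=2))
```

Saved to a file, output like this could be handed to `kubectl apply -f <file>`, whereas the imperative counterpart would be along the lines of `kubectl create deployment web --image=nginx:1.25`. The declarative file can live in version control and be re-applied, which is one reason it is preferred in production.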

Key design principles

- Scale-in and scale-out workload

- High availability

- Self-healing

- Portability

- Security

There are other objects in Kubernetes as well, such as Deployments, ReplicaSets, Services, DaemonSets, and StatefulSets.

Together, the components above make up the core terminology of the Kubernetes architecture. These core components work seamlessly together. In this fashion, the Kubernetes architecture stays modular and scalable by creating an abstraction between the application and the underlying infrastructure.
