How to set up a Spark environment

Apache Spark is a unified analytics engine for large-scale data processing. This article will help you install Spark and set up Jupyter Notebooks in your Linux/Mac environment.

Prerequisites

- Java: make sure you have Java 8 or above installed.

- Python: make sure you have Python 3 installed.
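If you are not sure what is installed, a short script can check both prerequisites. This is only a sketch: the helper names `java_major`, `java_version_text`, and `python_ok` are made up for this example, and it assumes `java -version` reports a line such as `java version "1.8.0_251"` or `openjdk version "11.0.7"`.

```python
import re
import shutil
import subprocess
import sys


def java_major(version_text):
    """Extract the major Java version from `java -version` output.

    Java 8 and older report themselves as "1.8", "1.7", ...;
    newer releases report "11", "17", and so on.
    """
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_text)
    if match is None:
        return None
    first = int(match.group(1))
    if first == 1 and match.group(2) is not None:
        return int(match.group(2))
    return first


def java_version_text():
    """Return the output of `java -version`, or None if java is not on PATH."""
    java = shutil.which("java")
    if java is None:
        return None
    # `java -version` writes to stderr, so merge stderr into stdout.
    result = subprocess.run(
        [java, "-version"],
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,
        universal_newlines=True,
    )
    return result.stdout


def python_ok(version_info=sys.version_info):
    """True if the interpreter is Python 3 or newer."""
    return version_info[0] >= 3


text = java_version_text()
major = java_major(text) if text else None
print("Python 3 or newer:", python_ok())
print("Java major version:", major if major is not None else "not found")
```

If the script reports that Java was not found, or a major version below 8, install a JDK before continuing.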

Install Spark

Go to the Spark download page and select:

- The latest Spark release

- A package pre-built for Apache Hadoop

Then download the archive directly.

Extract the archive and move it to a location of your choice (writing to /opt may require sudo):

tar -xzf spark-2.4.5-bin-hadoop2.7.tgz

mv spark-2.4.5-bin-hadoop2.7 /opt/spark-2.4.5

Then create a symbolic link:

ln -s /opt/spark-2.4.5 /opt/spark

This way, you can keep several Spark versions side by side and switch between them by pointing the link at a different directory.
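To make the switching idea concrete, here is a small sketch that repoints a link from one version directory to another. It runs entirely inside a temporary directory, so nothing under /opt is touched; `point_link` and the directory name `spark-x.y.z` are hypothetical and stand in for whatever second version you install. On the command line, the equivalent is usually `ln -sfn <new directory> /opt/spark`.

```python
import os
import tempfile


def point_link(link_path, target_dir):
    """Make link_path a symlink to target_dir, replacing any existing link."""
    tmp_link = link_path + ".tmp"
    if os.path.lexists(tmp_link):
        os.remove(tmp_link)
    os.symlink(target_dir, tmp_link)
    # Renaming over the old link swaps it in a single step.
    os.replace(tmp_link, link_path)


def demo():
    """Create two fake version directories and switch a link between them."""
    with tempfile.TemporaryDirectory() as root:
        old = os.path.join(root, "spark-2.4.5")
        new = os.path.join(root, "spark-x.y.z")  # hypothetical newer version
        os.mkdir(old)
        os.mkdir(new)
        link = os.path.join(root, "spark")

        point_link(link, old)
        first = os.path.basename(os.readlink(link))

        point_link(link, new)
        second = os.path.basename(os.readlink(link))
        return first, second


print(demo())
```

Anything that refers to the link, rather than to a versioned directory, picks up the new version without further changes.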

Then you need to tell your system where to find Spark by editing ~/.bashrc (or ~/.zshrc):

export SPARK_HOME=/opt/spark

export PATH=$SPARK_HOME/bin:$PATH
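After restarting the terminal, you may want to confirm that the two variables took effect. The following is a minimal sketch, not part of Spark itself: `spark_home_problems` is a hypothetical helper that assumes the launcher lives at `$SPARK_HOME/bin/pyspark`, as it does in the pre-built packages.

```python
import os


def spark_home_problems(env=None):
    """Return a list of human-readable problems with the Spark environment."""
    env = os.environ if env is None else env
    home = env.get("SPARK_HOME")
    if not home:
        return ["SPARK_HOME is not set"]

    problems = []
    bin_dir = os.path.normpath(os.path.join(home, "bin"))

    # The pyspark launcher script should exist under $SPARK_HOME/bin.
    if not os.path.isfile(os.path.join(bin_dir, "pyspark")):
        problems.append("no pyspark launcher found in " + bin_dir)

    # $SPARK_HOME/bin should be one of the PATH entries.
    path_entries = [
        os.path.normpath(entry)
        for entry in env.get("PATH", "").split(os.pathsep)
        if entry
    ]
    if bin_dir not in path_entries:
        problems.append(bin_dir + " is not on PATH")

    return problems


for problem in spark_home_problems():
    print("Problem:", problem)
```

If the script prints nothing, both settings look consistent.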

Restart your terminal and you should be able to start PySpark now:

pyspark

If everything goes smoothly, you should see something like this:

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 2.4.5
      /_/

Using Python version 3.7.7 (default, Mar 10 2020 15:43:33)
SparkSession available as 'spark'.
>>>

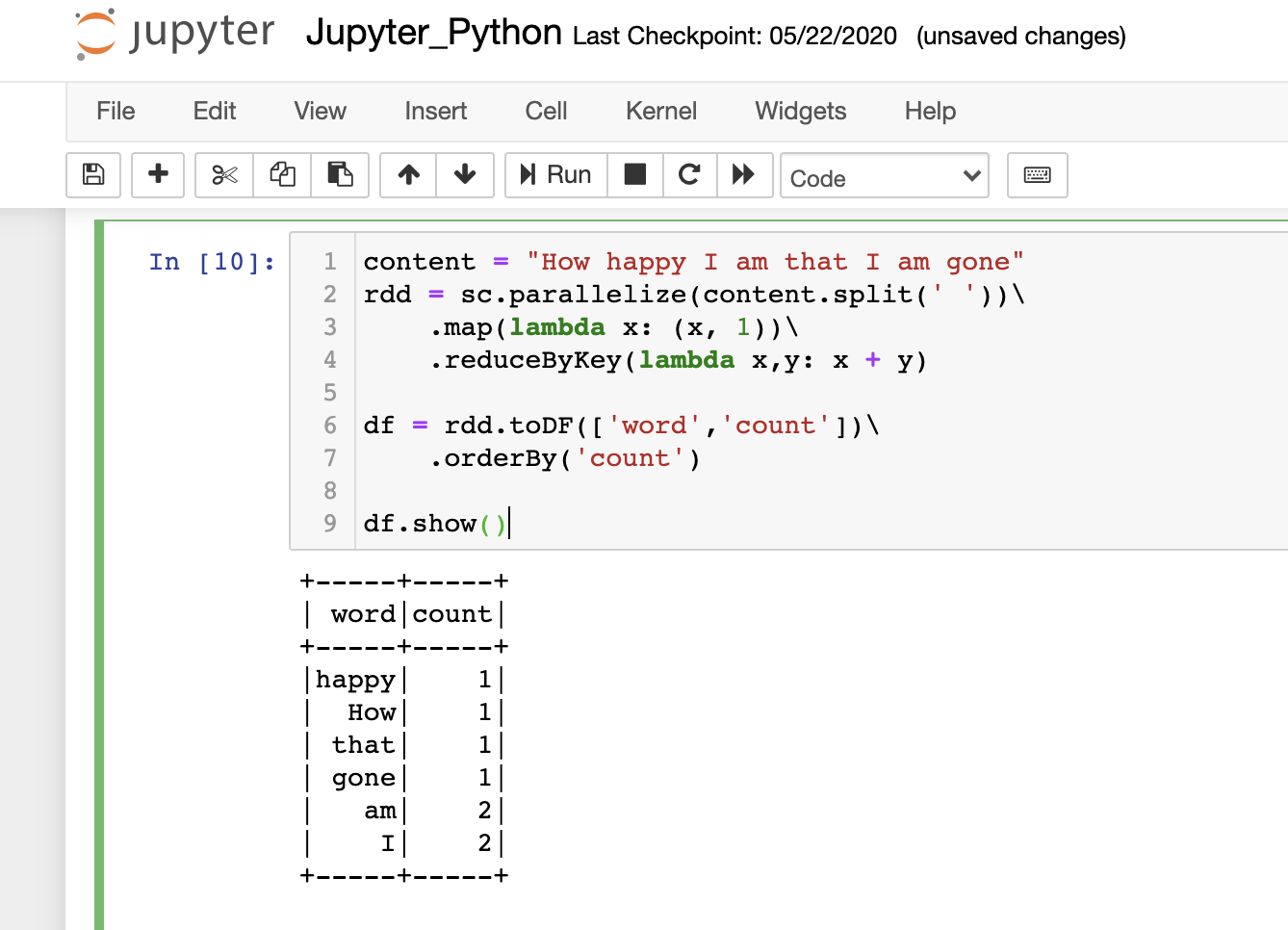

Let’s try something interesting. This mini script counts how often each word occurs in a string. Don’t worry about the syntax for now.

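content = "How happy I am that I am gone"

# sc is the SparkContext that the pyspark shell creates for you
rdd = sc.parallelize(content.split(' '))\
    .map(lambda x: (x, 1))\
    .reduceByKey(lambda x, y: x + y)

# Convert the (word, count) pairs to a DataFrame and print them
rdd.toDF(['word', 'count'])\
    .orderBy('count')\
    .show()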

The result should look like this (rows with the same count may appear in a different order):

+-----+-----+
| word|count|
+-----+-----+
| that|    1|
|  How|    1|
| gone|    1|
|happy|    1|
|   am|    2|
|    I|    2|
+-----+-----+
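If the Spark syntax feels opaque, it can help to see the same computation in plain Python. The sketch below mimics the two steps of the script: `map` turns each word into a `(word, 1)` pair, and `reduceByKey` merges the values of pairs that share a key. The `reduce_by_key` function is a stand-in written for this example, not a Spark API, and it runs on a single machine without Spark.

```python
content = "How happy I am that I am gone"

# Step 1 (map): pair every word with the number 1.
pairs = [(word, 1) for word in content.split(' ')]


def reduce_by_key(pairs, func):
    """Combine the values of all pairs that share a key using func."""
    merged = {}
    for key, value in pairs:
        if key in merged:
            merged[key] = func(merged[key], value)
        else:
            merged[key] = value
    return merged


# Step 2 (reduceByKey): add up the 1s for each distinct word.
counts = reduce_by_key(pairs, lambda x, y: x + y)

# Print the words from least to most frequent, like orderBy('count').
for word, count in sorted(counts.items(), key=lambda item: item[1]):
    print(word, count)
```

Spark performs the same steps, but spreads the pairs across workers and merges the partial results, which is what lets it scale to data that does not fit on one machine.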

Set up Jupyter Notebook (optional)

Jupyter Notebook is a popular web application that allows you to create documents containing live code and visualizations of the running results.

Install it with pip:

pip install jupyter

Then add the following environment variables to your ~/.bashrc (or ~/.zshrc):

export PYSPARK_DRIVER_PYTHON=jupyter

export PYSPARK_DRIVER_PYTHON_OPTS='notebook'

Remember to restart your terminal and launch PySpark again:

pyspark

This should start a new Jupyter Notebook in your web browser. Create a new notebook by clicking on New -> Python 3.
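Note that with these variables in your shell profile, every `pyspark` call opens Jupyter instead of the plain shell. If you would rather keep both options, one possible approach is to set the variables for a single launch only. The sketch below assumes `pyspark` is already on your PATH; `jupyter_pyspark_env` and `launch_notebook` are hypothetical helper names.

```python
import os
import subprocess


def jupyter_pyspark_env(base_env=None):
    """Return a copy of the environment with the Jupyter driver settings added."""
    env = dict(os.environ if base_env is None else base_env)
    env["PYSPARK_DRIVER_PYTHON"] = "jupyter"
    env["PYSPARK_DRIVER_PYTHON_OPTS"] = "notebook"
    return env


def launch_notebook():
    """Start pyspark with the Jupyter settings; blocks until the server exits."""
    return subprocess.call(["pyspark"], env=jupyter_pyspark_env())


# To start the notebook server, call launch_notebook() yourself.
```

Because the variables are only set for the child process, running `pyspark` directly in the terminal still gives you the regular interactive shell.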

Let’s copy and paste our previous code into a cell and run it by pressing Shift+Enter or Ctrl+Enter.