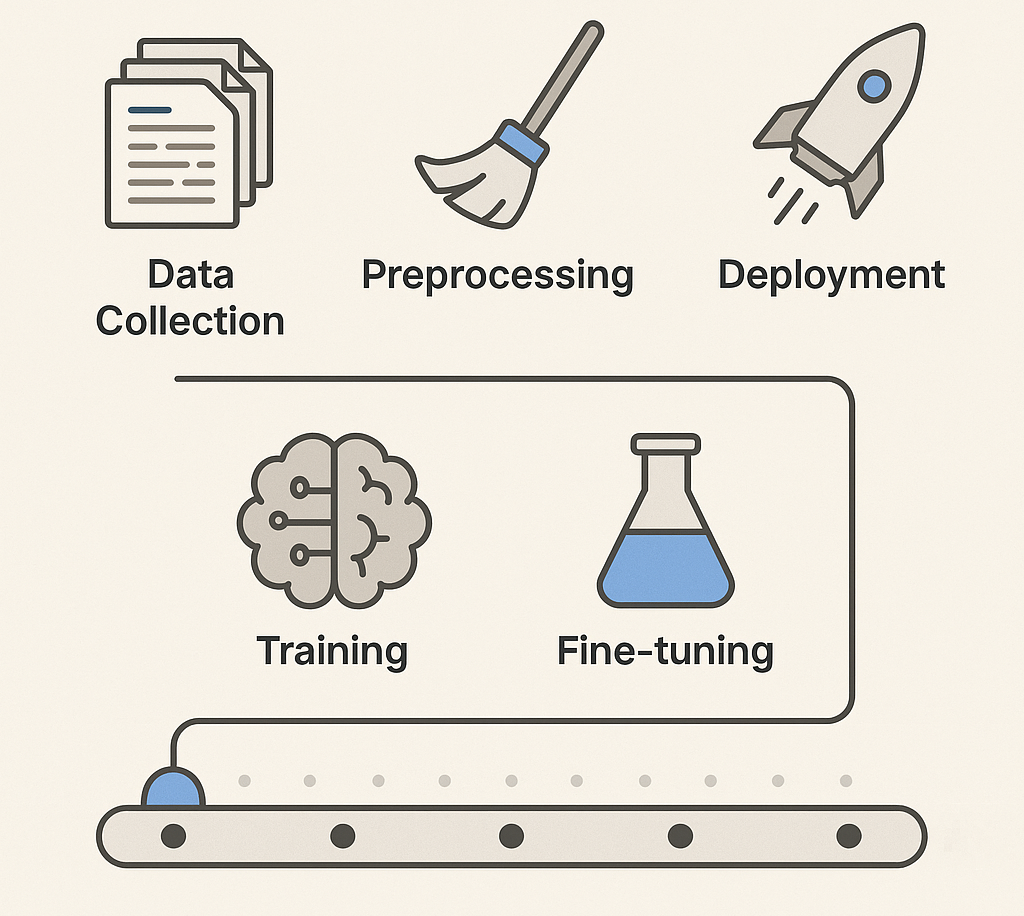

Large Language Models (LLMs) like GPT-4, Claude, and Gemini have become the engines behind AI-powered tools, chatbots, and intelligent agents. But how do these models actually learn to understand and generate language? What happens behind the scenes before they can write code, summarize documents, or tutor students?

In this blog, we’ll explain how LLMs are trained step by step and what developers should know about this complex, resource-intensive process.

Step 1: Curating massive datasets

Training starts with data. Lots of it. LLMs are trained on massive corpora of text, much of it collected from the public web, including:

Books, encyclopedias, and academic papers

News articles and blog posts

Code from public repositories on platforms like GitHub

Wikipedia and Common Crawl

Public forums, FAQs, technical documentation, and more

These sources are selected to expose the model to a wide variety of vocabulary, sentence structures, and knowledge domains. Engineers use automated pipelines to clean the data, removing profanity, private information, or spammy content. For more specialized models, domain-specific datasets (e.g., biomedical literature or legal contracts) are added to fine-tune the model’s expertise.

The goal is to cover diverse language styles, domains, and reasoning patterns. To reduce bias and improve quality, engineers filter toxic, repetitive, or low-quality content using custom heuristics and classifiers, and they apply deduplication techniques to remove near-identical documents.
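The deduplication idea can be illustrated with a small sketch. It compares documents by their overlapping word trigrams (shingles) and drops any document whose Jaccard similarity to an already kept one exceeds a threshold. The function names, the trigram size, and the 0.8 threshold are assumptions chosen for illustration. This pairwise comparison is quadratic in the number of documents, so web-scale pipelines generally rely on approximate methods instead.

```python
import re

def shingles(text, n=3):
    """Return the set of word n-grams (shingles) in a lowercased document."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < n:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def jaccard(a, b):
    """Jaccard similarity: size of the intersection over size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def deduplicate(docs, threshold=0.8):
    """Keep a document only if it is not too similar to one already kept."""
    kept, kept_shingles = [], []
    for doc in docs:
        s = shingles(doc)
        if all(jaccard(s, other) < threshold for other in kept_shingles):
            kept.append(doc)
            kept_shingles.append(s)
    return kept

docs = [
    "The quick brown fox jumps over the lazy dog near the river bank.",
    "The quick brown fox jumps over the lazy dog near the river bank!",
    "Gradient descent updates parameters in the direction of lower loss.",
]
unique_docs = deduplicate(docs)  # the second document is dropped as a near-duplicate
```

The first two documents differ only in punctuation, so they produce identical shingle sets and only one of them survives.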

Step 2: Tokenization and preprocessing

Before training can begin, raw text is converted into a format the model can process:

Text is broken into subword units called tokens using algorithms like Byte Pair Encoding (BPE) or the unigram language model, often through libraries such as SentencePiece

Tokens are mapped to integer IDs from a fixed vocabulary; the embeddings that capture semantic similarity are learned later, during training

Long documents are chunked into model-sized sequences (e.g., 2,048 or 4,096 tokens)

Special tokens mark sentence boundaries, prompt types, and metadata

Tokenization allows the model to work with both common and rare words efficiently. Preprocessing also involves shuffling and batching data so that each batch mixes topics, which supports stable convergence. For multilingual models, scripts and language-specific nuances are handled by adding language tokens and normalizing text forms.

This step ensures that text is uniformly encoded and structured for efficient model ingestion.
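To make the subword idea concrete, here is a toy sketch of how BPE learns its merge rules: it repeatedly finds the most frequent adjacent pair of symbols and fuses it into a new symbol. The word counts and function names are made up for illustration, and production tokenizers add details this sketch omits, such as byte-level fallback and end-of-word markers.

```python
from collections import Counter

def count_pairs(vocab):
    """Count adjacent symbol pairs, weighted by word frequency."""
    pairs = Counter()
    for symbols, freq in vocab.items():
        for left, right in zip(symbols, symbols[1:]):
            pairs[(left, right)] += freq
    return pairs

def merge_pair(vocab, pair):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in vocab.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

def train_bpe(word_counts, num_merges):
    """Learn up to `num_merges` merge rules from a {word: count} dictionary."""
    vocab = {tuple(word): count for word, count in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        pairs = count_pairs(vocab)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # ties go to the first pair seen
        vocab = merge_pair(vocab, best)
        merges.append(best)
    return merges, vocab

# Hypothetical word frequencies for a tiny corpus.
word_counts = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
merges, vocab = train_bpe(word_counts, num_merges=3)
```

On this toy corpus the first two merges build the suffix "est" out of "e", "s", and "t", which is how frequent word endings become single tokens while rare words remain split into smaller pieces.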

Step 3: Training with next-token prediction

The core training objective is simple but powerful: predict the next token in a sequence given all previous ones.

A transformer-based architecture processes token embeddings through layers of self-attention and feedforward networks

The model updates its parameters using backpropagation and gradient descent

Training requires enormous compute, typically spread across hundreds to thousands of GPUs or TPUs

Over time, the model internalizes grammar, factual knowledge, commonsense reasoning, and stylistic variation

Pretraining typically makes roughly one pass over the corpus, sometimes with a few extra passes over high-quality subsets, using a learning rate schedule such as linear warm-up followed by cosine decay to stabilize convergence. The model gradually improves its ability to continue text, complete stories, and answer questions by learning dependencies and patterns across large contexts.
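A warm-up and cosine decay schedule can be written in a few lines. The sketch below is one common way to combine the two; the specific values for `max_lr`, `min_lr`, `warmup_steps`, and `total_steps` are illustrative assumptions, not settings from any particular model.

```python
import math

def lr_at_step(step, max_lr=3e-4, min_lr=3e-5,
               warmup_steps=2000, total_steps=100000):
    """Linear warm-up to max_lr, then cosine decay down to min_lr."""
    if step < warmup_steps:
        # Ramp up linearly so early, noisy gradients take small steps.
        return max_lr * (step + 1) / warmup_steps
    if step >= total_steps:
        return min_lr
    # Fraction of the decay phase completed, from 0.0 to 1.0.
    progress = (step - warmup_steps) / (total_steps - warmup_steps)
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return min_lr + (max_lr - min_lr) * cosine
```

Calling `lr_at_step(step)` once per optimizer step yields a rate that rises during warm-up, peaks at `max_lr`, and then declines smoothly toward `min_lr`.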

This approach is self-supervised: the training labels come from the text itself rather than from human annotation, which allows the model to generalize broadly across language tasks.
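The next-token objective comes down to a cross-entropy loss in which each position is scored on the probability it assigns to the token that actually follows. The sketch below computes that loss in plain Python for a toy vocabulary of four tokens. The logits are hand-written stand-ins for what a transformer would output, and the function names are illustrative.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_loss(logits_per_position, token_ids):
    """Average cross-entropy where position t predicts token t + 1."""
    losses = []
    for t in range(len(token_ids) - 1):
        probs = softmax(logits_per_position[t])
        target = token_ids[t + 1]
        losses.append(-math.log(probs[target]))
    return sum(losses) / len(losses)

# Toy sequence of three token IDs from a vocabulary of size 4.
token_ids = [0, 2, 1]

# A model that knows nothing assigns equal scores to every token.
uniform_logits = [[0.0, 0.0, 0.0, 0.0]] * 3
uniform_loss = next_token_loss(uniform_logits, token_ids)

# A model that strongly favors the correct next token gets a lower loss.
confident_logits = [[0.0, 0.0, 5.0, 0.0], [0.0, 5.0, 0.0, 0.0], [0.0] * 4]
confident_loss = next_token_loss(confident_logits, token_ids)
```

With uniform scores the loss equals the natural log of the vocabulary size. Training pushes it lower by moving probability toward the observed next tokens.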

Step 4: Scaling up parameters and data

Modern LLMs have billions to trillions of parameters, and their performance improves with scale:

More data improves generalization across domains

Larger models capture more abstract and compositional patterns

Techniques like data parallelism and model parallelism help distribute the workload

Optimization frameworks like DeepSpeed and Megatron-LM enhance throughput

Training such large models requires careful memory management, including techniques such as gradient checkpointing. Mixed precision training is commonly used to speed up computations and reduce memory usage. Empirical scaling laws suggest that loss falls predictably, roughly as a power law, as model size, training data, and compute grow, and larger models also tend to show better few-shot performance. These trends guide how future models are sized.

Scale introduces new challenges in stability, memory usage, and efficiency, requiring sophisticated engineering solutions.
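A back-of-the-envelope calculation shows why memory becomes a bottleneck. The sketch below assumes a commonly cited rule of thumb of about 16 bytes per parameter for mixed precision training with the Adam optimizer: 2 for half-precision weights, 2 for gradients, 4 for a full-precision copy of the weights, and 8 for the two Adam moment estimates. It ignores activations, which add more, and the function names are illustrative.

```python
def training_memory_gb(num_params, bytes_per_param=16):
    """Rough memory for weights, gradients, and optimizer state (no activations)."""
    return num_params * bytes_per_param / 1e9

def weights_memory_gb(num_params, bits_per_weight=16):
    """Memory needed just to hold the weights at a given precision."""
    return num_params * bits_per_weight / 8 / 1e9

params_7b = 7e9  # a hypothetical 7-billion-parameter model
train_gb = training_memory_gb(params_7b)  # 112.0
weights_gb = weights_memory_gb(params_7b)  # 14.0
```

Under these assumptions, even a 7-billion-parameter model needs roughly 112 GB for training state alone, more than a single typical accelerator holds. That is why the workload has to be split across devices with data and model parallelism.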

Step 5: Alignment and instruction tuning

Raw LLMs may output irrelevant, verbose, or even harmful text. To make them useful:

Instruction tuning uses supervised datasets with prompt-response pairs

Examples include summarization, code generation, translation, and classification

Datasets are curated from human-written prompts, chatbot logs, or synthetic data

Fine-tuning improves prompt adherence and task specificity

Alignment also involves training on ethical guidelines and user-centric objectives. Models are evaluated for helpfulness, honesty, and harmlessness. This stage introduces the ability to follow human intent more precisely, often using multi-turn dialogues, system messages, or chain-of-thought prompts for reasoning. The result is a model that better understands how to follow instructions and solve tasks as intended.

This stage transforms a general-purpose model into a more goal-directed assistant.
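One practical detail of instruction tuning is how a prompt-response pair becomes a training example. A common approach, sketched below, concatenates the two and computes the loss only on the response tokens, so the model learns to answer rather than to reproduce prompts. The token IDs, the `eos_id` value, and the function names are hypothetical.

```python
def build_example(prompt_ids, response_ids, eos_id):
    """Concatenate prompt and response; mask the prompt out of the loss."""
    input_ids = prompt_ids + response_ids + [eos_id]
    # 0 = ignore this token in the loss, 1 = train on this token.
    loss_mask = [0] * len(prompt_ids) + [1] * (len(response_ids) + 1)
    return input_ids, loss_mask

def masked_mean_loss(per_token_losses, loss_mask):
    """Average the loss over response tokens only.

    per_token_losses[i] is the loss for predicting input_ids[i].
    """
    total = sum(l * m for l, m in zip(per_token_losses, loss_mask))
    count = sum(loss_mask)
    return total / count if count else 0.0

# Hypothetical token IDs for a short prompt and response.
input_ids, loss_mask = build_example([11, 12, 13], [21, 22], eos_id=0)
loss = masked_mean_loss([9.0, 9.0, 9.0, 1.0, 2.0, 3.0], loss_mask)
```

The end-of-sequence token is included in the loss so the model also learns when to stop generating.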

Step 6: Reinforcement learning from human feedback (RLHF)

To better align models with human intent and ethics:

Annotators rank different model outputs for a given prompt

A reward model is trained to predict these rankings

Reinforcement learning (often PPO) adjusts the LLM to produce more preferred outputs

This step boosts safety, relevance, and politeness

RLHF is resource-intensive but has proven highly effective for reliable conversational performance.
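The reward model step can be sketched with a pairwise loss. A ranking is first expanded into preferred and rejected pairs, and the reward model is then penalized whenever it scores the rejected output above the preferred one. The loss below, the negative log-sigmoid of the score difference, is a widely used formulation. The function names and reward values are illustrative assumptions.

```python
import math
from itertools import combinations

def ranking_to_pairs(ranked_outputs):
    """Turn a best-to-worst ranking into (preferred, rejected) pairs."""
    return list(combinations(ranked_outputs, 2))

def pairwise_loss(reward_preferred, reward_rejected):
    """-log(sigmoid(r_preferred - r_rejected)), computed without overflow."""
    diff = reward_preferred - reward_rejected
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))

pairs = ranking_to_pairs(["answer_a", "answer_b", "answer_c"])

# Hypothetical reward scores: a correct ordering gives a small loss,
# a reversed ordering gives a large one.
good = pairwise_loss(2.0, -1.0)
bad = pairwise_loss(-1.0, 2.0)
```

Minimizing this loss over many annotated pairs teaches the reward model to reproduce human preferences, and its scores then serve as the reward signal for the reinforcement learning step.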

Step 7: Evaluation and benchmark testing

LLMs are evaluated on academic and real-world benchmarks before deployment:

Tasks span reading comprehension, math, code, ethics, and logical reasoning

Examples include MMLU, ARC, Big-Bench, HumanEval, and HellaSwag

Metrics include perplexity, accuracy, BLEU scores, and human ratings

Red-teaming and beta testing uncover weaknesses and edge cases

Thorough evaluation helps ensure that models meet performance, safety, and usability goals.
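Two of these metrics are simple to compute by hand. Perplexity is the exponential of the average negative log-probability the model assigns to each token, and accuracy is the fraction of predictions that match the reference answers. The sketch below uses made-up log-probabilities and answers; the function names are illustrative.

```python
import math

def perplexity(token_log_probs):
    """exp of the average negative log-likelihood per token."""
    avg_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_nll)

def accuracy(predictions, references):
    """Fraction of predictions that exactly match the reference."""
    correct = sum(p == r for p, r in zip(predictions, references))
    return correct / len(references)

# A model that assigns probability 0.25 to every token behaves like
# a uniform guess over 4 options, so its perplexity is 4.
ppl = perplexity([math.log(0.25)] * 10)

# Hypothetical multiple-choice answers.
acc = accuracy(["B", "C", "A", "D"], ["B", "C", "D", "D"])
```

Lower perplexity means the model is less surprised by held-out text, which makes it a useful check during training, while benchmark accuracy is closer to what users experience.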

Step 8: Continual learning and updates

Language and facts evolve, and so do LLMs:

Fine-tuning on recent news or domain-specific datasets keeps models relevant

Retrieval-augmented generation (RAG) injects fresh knowledge without retraining

Agents or pipelines can use feedback loops to check and revise LLM outputs

Done carefully, these approaches let models adapt over time while limiting catastrophic forgetting, where training on new data erodes previously learned capabilities.
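The retrieval idea behind RAG can be shown with a toy example: find the stored document most similar to the question and place it in the prompt. The sketch below uses bag-of-words counts as a stand-in for learned embeddings, and the documents, prompt template, and function names are all made up for illustration.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy 'embedding': word counts. Real systems use a learned model."""
    return Counter(re.findall(r"\w+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=1):
    """Prepend retrieved context so the model can answer from it."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"

# Hypothetical knowledge base entries.
documents = [
    "The library released version 2.0 in March with a new tokenizer.",
    "Quantization reduces the precision of model weights.",
    "Cosine decay lowers the learning rate during training.",
]
query = "Which version of the library was released in March?"
prompt = build_prompt(query, documents)
```

Because the knowledge lives in the document store rather than in the model weights, updating it is a matter of adding or replacing documents.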

Step 9: Safety and red-teaming

Safety audits test how LLMs behave under stress or adversarial prompting:

Red teams craft prompts to elicit misinformation, bias, or unsafe behavior

Safety layers include toxicity classifiers, refusal heuristics, and guardrails

Outputs are evaluated against safety standards like AI risk frameworks

Developers retrain or filter based on these findings

This step is vital for enterprise trust, regulatory compliance, and user well-being.

Step 10: Compression and deployment

Trained LLMs are often too large to serve efficiently on typical hardware. Compression techniques include:

Quantization reduces numeric precision (e.g., from FP32 or FP16 to INT8 or INT4) to shrink model size and speed up inference

Pruning removes low-impact weights from the model

Knowledge distillation trains smaller models to mimic larger ones

These techniques make deployment feasible on more constrained targets such as CPUs, mobile devices, or serverless platforms, usually at the cost of a small drop in quality.
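Quantization is the easiest of these techniques to show in code. The sketch below applies symmetric 8-bit quantization to a handful of weights: each value is divided by a shared scale, rounded to an integer in [-127, 127], and later multiplied back. The weights and function names are illustrative, and real schemes usually quantize per channel or per block rather than using one scale for everything.

```python
def quantize_int8(weights):
    """Symmetric quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Map the integers back to approximate float values."""
    return [v * scale for v in quantized]

weights = [0.12, -0.5, 0.33, 0.0, 0.49]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Rounding error per weight is at most half of one quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now occupies one byte instead of four, and the reconstruction error stays within half a quantization step. Fewer bits mean coarser steps, which is the trade-off between size and accuracy.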

Step 11: Multimodal expansion

Next-gen LLMs go beyond text:

Visual-language models can describe images, generate diagrams, and answer visual questions

Audio-language models handle speech input/output

Multimodal transformers handle multiple modalities within a single architecture

These models enable interactive agents, digital tutors, and accessibility features across platforms.

Step 12: Responsible data sourcing

Ethical AI starts with ethical data:

Avoiding scraping of private or copyrighted material

Respecting robots.txt and terms of service

Offering opt-out options for content creators

Documenting dataset sources and filtering criteria

Transparency in data sourcing is key to model integrity and public trust.
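Respecting robots.txt is something a crawler can check programmatically. The sketch below uses Python's standard `urllib.robotparser` module to decide whether a URL may be fetched. The robots.txt contents, the crawler names, and the `is_allowed` helper are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt: everyone is kept out of /private/,
# and one specific crawler is blocked from the whole site.
robots_txt = """\
User-agent: *
Disallow: /private/

User-agent: ExampleDataBot
Disallow: /
"""

def is_allowed(robots_body, user_agent, url):
    """Check a URL against robots.txt rules for the given crawler."""
    parser = RobotFileParser()
    parser.parse(robots_body.splitlines())
    return parser.can_fetch(user_agent, url)

blog_ok = is_allowed(robots_txt, "SomeCrawler", "https://example.com/blog/post")
private_ok = is_allowed(robots_txt, "SomeCrawler", "https://example.com/private/page")
blocked_bot = is_allowed(robots_txt, "ExampleDataBot", "https://example.com/blog/post")
```

In a real pipeline the file would be fetched from the site itself, and the result would be logged alongside the document so that sourcing decisions can be audited later.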

Step 13: Open models and community fine-tuning

Open-source LLMs are democratizing access:

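Projects like LLaMA, Mistral, Falcon, and Mixtral release model weights under open or community licenses whose terms vary (for example, Mistral and Mixtral use Apache 2.0, while LLaMA uses a custom license with usage restrictions)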

Developers can fine-tune with LoRA, QLoRA, or full training

Communities curate instruction datasets like OpenAssistant or UltraChat

Leaderboards (e.g., Hugging Face) encourage iteration and benchmarking

Open models foster transparency, experimentation, and localization.
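LoRA is popular for community fine-tuning because of how few parameters it trains. Instead of updating a full weight matrix W, it freezes W and learns two small matrices A and B whose product is added to it, scaled by alpha divided by the rank. The sketch below counts the parameters involved and shows the forward pass on tiny matrices. The dimensions, values, and function names are illustrative.

```python
def lora_param_counts(d_in, d_out, rank):
    """Parameters in a full weight matrix versus its LoRA adapter."""
    full = d_in * d_out
    lora = rank * (d_in + d_out)  # A is rank x d_in, B is d_out x rank
    return full, lora

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]

def lora_forward(W, A, B, x, alpha, rank):
    """Compute W x + (alpha / rank) * B (A x) with W kept frozen."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / rank) * u for b, u in zip(base, update)]

full, lora = lora_param_counts(d_in=4096, d_out=4096, rank=8)

# Tiny example: B starts at zero, so the adapter initially changes nothing.
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[0.5, 0.5]]      # rank 1: shape 1 x 2
B = [[0.0], [0.0]]    # shape 2 x 1, zero-initialized
y = lora_forward(W, A, B, x=[3.0, 4.0], alpha=1.0, rank=1)
```

For a 4096 by 4096 layer at rank 8, the adapter has 65,536 trainable parameters against 16,777,216 in the full matrix, which is under half a percent. That gap is what makes fine-tuning practical on a single consumer GPU.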

Wrapping up

LLM training is a multi-stage process combining self-supervised pretraining, supervised fine-tuning, reinforcement learning from human feedback, and deployment engineering. From web-scale data to GPU clusters and safety layers, every stage plays a critical role in shaping model capabilities.

As open tools mature, more developers can participate in customizing or extending LLMs for their domains. Understanding how LLMs are trained isn’t just academic. It’s foundational to building trustworthy, performant, and future-ready AI systems.