What are the core components of LangChain?

LangChain is one of the most popular frameworks for building applications with large language models (LLMs). From intelligent chatbots to document summarizers and retrieval-based assistants, LangChain gives developers the building blocks they need to turn LLMs into functioning products.

But to use LangChain effectively, you need to understand its structure.

This blog will walk you through the core LangChain components, what they do, and how they work together to support powerful LLM workflows.

Why LangChain is modular by design

Unlike monolithic libraries, LangChain is built around modular abstractions. You can pick only the parts you need, which helps when scaling from small prototypes to production-grade systems.

Each of the core LangChain components plays a specific role: some wrap LLMs, others handle memory, tools, or data retrieval. You can combine them in flexible ways to match your app’s requirements.

This modularity also encourages experimentation. You can prototype quickly with just a chain and prompt, then incrementally add memory, tools, and agents as your application matures.

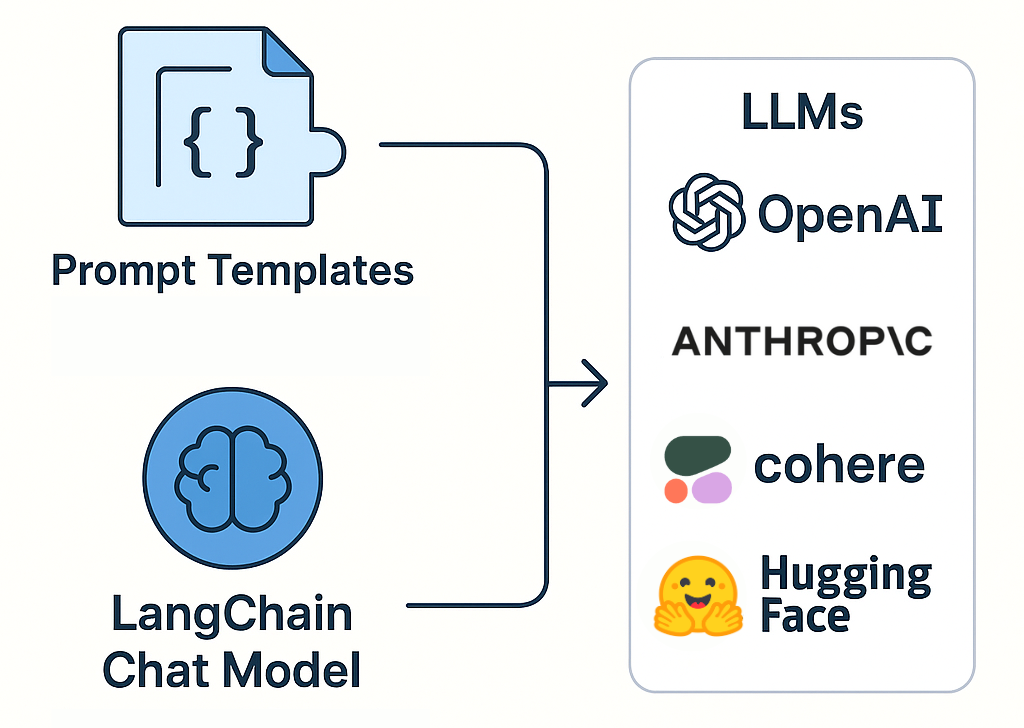

Models and prompt templates

At the heart of LangChain are the models. The model classes are wrappers around LLM providers such as OpenAI, Anthropic, Cohere, or Hugging Face.

LLMs: Abstractions for calling foundation models through a text-in, text-out interface. LangChain standardizes how these models are accessed, which simplifies switching providers or supporting several at once.

Chat models: Designed for multi-turn conversations, these take a list of messages rather than a single string. They support chat-based flows and are useful for building assistants or support agents.

Prompt templates: Help build reusable, parameterized prompts. You can use placeholders for dynamic input, making your prompts more adaptable and organized. LangChain also allows nesting templates, enabling more complex prompt generation.

These abstractions provide consistency and reusability, and they reduce prompt errors (especially when scaling across teams).
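To make the idea of a parameterized prompt concrete, here is a minimal, framework-free sketch. `SimplePromptTemplate` is a hypothetical stand-in written for this post, not LangChain's own class; it only shows the mechanism of named placeholders plus an early check for missing inputs.

```python
from dataclasses import dataclass
from string import Formatter


@dataclass(frozen=True)
class SimplePromptTemplate:
    """Hypothetical stand-in for a prompt template: text with {placeholders}."""

    template: str

    @property
    def input_variables(self) -> list[str]:
        # Collect placeholder names in order of first appearance.
        names: list[str] = []
        for _, field_name, _, _ in Formatter().parse(self.template):
            if field_name and field_name not in names:
                names.append(field_name)
        return names

    def format(self, **values: str) -> str:
        missing = [name for name in self.input_variables if name not in values]
        if missing:
            # Fail early instead of sending a half-filled prompt to a model.
            raise KeyError(f"missing prompt variables: {missing}")
        return self.template.format(**values)


summary_prompt = SimplePromptTemplate(
    "Summarize the following {doc_type} in {n_sentences} sentences:\n{text}"
)
prompt = summary_prompt.format(doc_type="email", n_sentences="2", text="Hi team, ...")
print(prompt)
```

Keeping the template separate from the values is what makes a prompt reusable: the same template can be filled with different inputs, and a missing variable is caught before any model call is made.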

Chains

Chains are sequences of steps that automate workflows involving one or more LLM calls. They're one of the most central LangChain components, allowing you to compose logic easily.

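LLMChain: Basic unit that connects a prompt to a model. Recent LangChain releases treat it as legacy and favor composing a prompt and a model directly through the Runnable interface covered later in this post.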

SequentialChain: Allows chaining multiple LLMChain components in a linear order.

RouterChain: Selects among chains dynamically based on the input, which is useful for multi-intent systems, for example sending billing questions and technical questions to different prompts.

Chains abstract control flow logic, making it easier to manage and debug complex LLM pipelines.
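The three chain shapes described above can be sketched in plain Python. This is an illustration of the control flow only: `fake_llm`, `make_llm_step`, `sequential`, and `router` are hypothetical helpers, and the model call is a placeholder that echoes its prompt.

```python
from typing import Callable

Step = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Placeholder for a real model call; it just echoes the prompt in brackets."""
    return f"[model output for: {prompt}]"


def make_llm_step(template: str) -> Step:
    """Prompt-plus-model unit: fill the template, then call the model."""
    return lambda text: fake_llm(template.format(input=text))


def sequential(*steps: Step) -> Step:
    """Run steps in order, feeding each output into the next step."""
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run


def router(routes: dict[str, Step], default: Step) -> Step:
    """Pick a step by keyword match; a real router might ask a model instead."""
    def run(text: str) -> str:
        for keyword, step in routes.items():
            if keyword in text.lower():
                return step(text)
        return default(text)
    return run


outline_then_draft = sequential(
    make_llm_step("Write an outline about {input}"),
    make_llm_step("Expand this outline into a draft: {input}"),
)
support_router = router(
    {"refund": make_llm_step("Answer this billing question: {input}")},
    default=make_llm_step("Answer this general question: {input}"),
)

print(outline_then_draft("vector stores"))
print(support_router("I want a refund"))
```

The keyword match in `router` is deliberately naive. The point is only that routing is a decision made before a chain runs, so each intent can have its own prompt.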

Tools and agents

You'll use tools and agents when you need your model to perform tasks beyond text generation, such as retrieving information or calling external APIs.

Tools: Functions exposed to the agent. Examples include calculators, search tools, or database queries. Tools can be prebuilt or user-defined and support input/output schemas for validation.

Agents: Decision-making LLMs that determine which tools to use and in what order. LangChain offers flexible agent architectures, including zero-shot and ReAct-style agents.

These components enable dynamic, goal-oriented workflows where models make decisions and take actions based on user inputs.
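As a rough sketch of how tools and an agent fit together, the example below registers two toy tools and uses a hard-coded rule in place of the model's decision. Everything here (`Tool`, `TOOLS`, `choose_action`, `run_agent`) is hypothetical and framework-free; a real agent would prompt an LLM with the tool descriptions and could loop over several tool calls.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    name: str
    description: str
    func: Callable[[str], str]


def add_numbers(expression: str) -> str:
    """Add integers written as 'a + b + c'."""
    return str(sum(int(part) for part in expression.split("+")))


def lookup_capital(country: str) -> str:
    """Toy lookup table standing in for a search or database tool."""
    capitals = {"france": "Paris", "japan": "Tokyo"}
    return capitals.get(country.strip().lower(), "unknown")


TOOLS = {
    tool.name: tool
    for tool in (
        Tool("calculator", "Adds integers, e.g. '2 + 3'", add_numbers),
        Tool("capitals", "Returns the capital of a country", lookup_capital),
    )
}


def choose_action(question: str) -> tuple[str, str]:
    """Stand-in for the model's decision: returns (tool name, tool input)."""
    if "+" in question:
        return "calculator", question.rstrip("?").split("is")[-1]
    return "capitals", question.rstrip("?").split("of")[-1]


def run_agent(question: str) -> str:
    """One decide-act-observe step: pick a tool, call it, report the result."""
    tool_name, tool_input = choose_action(question)
    observation = TOOLS[tool_name].func(tool_input)
    return f"{tool_name} -> {observation}"


print(run_agent("What is 2 + 3?"))
print(run_agent("What is the capital of Japan?"))
```

The separation matters more than the details: tools are ordinary functions with a name and description, and the agent is whatever component decides which one to call.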

Memory

LLM calls are stateless, so memory modules store previous interactions and feed them back into the prompt. This makes them ideal for conversations or tasks that require persistent context.

Buffer memory: Stores entire interaction histories, which suits chatbot-like interfaces with short sessions, although the prompt grows with every turn.

Summary memory: Reduces token load by summarizing conversation history.

Entity memory: Tracks references to specific entities mentioned by users, improving personalization.

LangChain’s memory architecture supports plug-and-play compatibility with chains and agents, enhancing interactivity and context retention.
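The trade-off between buffer and summary memory is easier to see in code. The sketch below is a simplified illustration with hypothetical class names; `naive_summarize` stands in for what would normally be an LLM call that condenses older turns.

```python
from collections import deque


class BufferMemory:
    """Keeps every turn verbatim; context grows with the conversation."""

    def __init__(self) -> None:
        self.turns: list[tuple[str, str]] = []

    def save(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))

    def load(self) -> str:
        return "\n".join(f"User: {u}\nAssistant: {a}" for u, a in self.turns)


class WindowedSummaryMemory:
    """Keeps the last `k` turns verbatim and folds older turns into a summary."""

    def __init__(self, k: int, summarize) -> None:
        self.recent = deque()
        self.k = k
        self.summary = ""
        self.summarize = summarize  # in practice this would be an LLM call

    def save(self, user: str, assistant: str) -> None:
        self.recent.append((user, assistant))
        while len(self.recent) > self.k:
            old_user, old_assistant = self.recent.popleft()
            self.summary = self.summarize(self.summary, old_user, old_assistant)

    def load(self) -> str:
        recent = "\n".join(f"User: {u}\nAssistant: {a}" for u, a in self.recent)
        return f"Summary: {self.summary}\n{recent}" if self.summary else recent


def naive_summarize(summary: str, user: str, assistant: str) -> str:
    """Hypothetical summarizer: records only the topic of each old user turn."""
    return (summary + "; " if summary else "") + f"user asked about {user!r}"


buffer = BufferMemory()
windowed = WindowedSummaryMemory(k=1, summarize=naive_summarize)
for user, assistant in [("pricing", "It is $5."), ("refunds", "Within 30 days.")]:
    buffer.save(user, assistant)
    windowed.save(user, assistant)

print(buffer.load())
print(windowed.load())
```

Whatever `load()` returns is what gets prepended to the next prompt, so the choice of memory type is really a choice about how many tokens of history each call carries.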

Retrieval and vector stores

Retrieval allows your app to augment LLM responses with external knowledge, which is an essential part of building retrieval-augmented generation (RAG) systems.

Retrievers: Accept queries and return the most relevant documents.

Vector stores: Pinecone, FAISS, Chroma, and others store document embeddings for fast semantic search.

Document loaders: Extract data from sources like PDFs, web pages, or databases. Chunking is a separate step, handled by the text splitters described later in this post.

LangChain also supports hybrid search strategies and metadata filtering, improving the precision and relevance of results.
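The retrieval flow (embed documents, embed the query, rank by similarity, optionally filter on metadata) can be sketched without any external service. The example below is a toy under loud assumptions: `embed` uses word counts instead of a learned embedding model, and `InMemoryVectorStore` is a hypothetical class written for this post, not a LangChain integration.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Document:
    text: str
    metadata: dict = field(default_factory=dict)


def embed(text: str) -> Counter:
    """Toy embedding: word counts. Real systems use a learned embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    def __init__(self) -> None:
        self._rows: list[tuple[Counter, Document]] = []

    def add(self, docs: list[Document]) -> None:
        self._rows.extend((embed(doc.text), doc) for doc in docs)

    def search(self, query: str, k: int = 2,
               where: Optional[dict] = None) -> list[Document]:
        """Return the top-k documents by similarity, after metadata filtering."""
        query_vec = embed(query)
        candidates = [
            (cosine(query_vec, vec), doc)
            for vec, doc in self._rows
            if all(doc.metadata.get(key) == value for key, value in (where or {}).items())
        ]
        candidates.sort(key=lambda pair: pair[0], reverse=True)
        return [doc for _, doc in candidates[:k]]


store = InMemoryVectorStore()
store.add([
    Document("Refunds are issued within 30 days of purchase.", {"source": "policy"}),
    Document("Our office is closed on public holidays.", {"source": "policy"}),
    Document("Refunds were discussed in the Q3 meeting.", {"source": "minutes"}),
])
hits = store.search("How do refunds work?", k=1, where={"source": "policy"})
print(hits[0].text)
```

In this toy data the meeting minutes also mention refunds, so the metadata filter is what keeps the answer grounded in the policy document rather than in whichever text happens to score highest.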

Callbacks and tracing

LangChain offers robust tracing to help you debug and analyze your pipelines.

Callbacks can be added to log each step’s execution time, input, and output.

Tools like LangSmith offer visual interfaces for reviewing trace logs, identifying bottlenecks, and monitoring performance.

This gives production systems better transparency and observability, which makes workflows easier to debug and optimize.
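The callback pattern itself is simple: the pipeline notifies registered handlers after each step. The sketch below illustrates that pattern with hypothetical names (`TimingCallback`, `run_pipeline`); it is not LangChain's callback API, only the idea behind it.

```python
import time
from typing import Any, Callable


class TimingCallback:
    """Hypothetical handler that records one event per executed step."""

    def __init__(self) -> None:
        self.events: list[dict[str, Any]] = []

    def on_step_end(self, name: str, inputs: Any, output: Any, seconds: float) -> None:
        self.events.append(
            {"step": name, "input": inputs, "output": output, "seconds": seconds}
        )


def run_pipeline(steps: list[tuple[str, Callable[[Any], Any]]], value: Any,
                 callbacks: list[TimingCallback]) -> Any:
    """Run named steps in order and notify every callback after each step."""
    for name, step in steps:
        start = time.perf_counter()
        result = step(value)
        elapsed = time.perf_counter() - start
        for callback in callbacks:
            callback.on_step_end(name, value, result, elapsed)
        value = result
    return value


tracer = TimingCallback()
answer = run_pipeline(
    [("normalize", str.strip), ("shout", str.upper)],
    "  hello  ",
    callbacks=[tracer],
)
for event in tracer.events:
    print(event["step"], repr(event["input"]), "->", repr(event["output"]))
```

Because the handler is passed in rather than hard-coded, the same pipeline can log to the console in development and to a tracing backend in production.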

Output parsers and evaluation

LangChain includes utilities to validate and structure LLM outputs:

Output parsers: Convert model output into formats like lists, JSON, or structured records.

Evaluation tools: Automatically assess generation quality, detect hallucinations, or score against reference outputs.

These utilities are essential for maintaining output quality in applications where structure and reliability are critical.
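An output parser is essentially a function from raw model text to a validated structure. Here is a minimal sketch, assuming the model was asked to reply with a JSON object; `parse_json_output`, `parse_comma_list`, and `OutputParseError` are hypothetical names used only for illustration.

```python
import json
import re


class OutputParseError(ValueError):
    """Raised when model text cannot be turned into the expected structure."""


def parse_json_output(text: str, required_keys: set[str]) -> dict:
    """Extract a JSON object from model text and check that its keys are present."""
    # Models often wrap JSON in prose, so take the span from the first '{'
    # to the last '}' and try to decode that.
    match = re.search(r"\{.*\}", text, flags=re.DOTALL)
    if match is None:
        raise OutputParseError("no JSON object found in model output")
    try:
        data = json.loads(match.group(0))
    except json.JSONDecodeError as exc:
        raise OutputParseError(f"invalid JSON: {exc}") from exc
    missing = required_keys.difference(data)
    if missing:
        raise OutputParseError(f"missing keys: {sorted(missing)}")
    return data


def parse_comma_list(text: str) -> list[str]:
    """Turn 'a, b, c' into ['a', 'b', 'c'], dropping empty items."""
    return [item.strip() for item in text.split(",") if item.strip()]


raw = 'Sure! Here is the result:\n{"sentiment": "positive", "score": 0.9}'
parsed = parse_json_output(raw, required_keys={"sentiment", "score"})
print(parsed["sentiment"], parsed["score"])
print(parse_comma_list("red, green, , blue"))
```

Raising a dedicated error type gives the calling code a clear hook for recovery, such as re-prompting the model with the parse error attached.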

Document loaders and text splitters

Loading and preprocessing content is critical for any RAG or document-based system.

Document loaders: Fetch data from files, cloud drives, databases, or APIs.

Text splitters: Chunk documents intelligently to preserve semantic meaning while optimizing for token limits.

LangChain offers multiple splitting strategies (recursive, character, token-based) to fit various embedding and retrieval needs.
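To show what "recursive" splitting means, here is a simplified sketch of the strategy: try the coarsest separator first, and only fall back to finer ones for pieces that are still too long. `recursive_split` is a hypothetical function written for this post; it measures size in characters and omits chunk overlap, which production splitters usually support.

```python
def recursive_split(text: str, chunk_size: int,
                    separators: tuple[str, ...] = ("\n\n", "\n", " ")) -> list[str]:
    """Split text into chunks of at most `chunk_size` characters.

    Tries the coarsest separator first and only falls back to finer ones
    (and finally to a hard character cut) for pieces that are still too long.
    """
    if len(text) <= chunk_size:
        return [text] if text else []
    if not separators:
        # No separators left: cut at fixed character offsets.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

    sep, finer = separators[0], separators[1:]
    chunks: list[str] = []
    current = ""
    for piece in text.split(sep):
        candidate = f"{current}{sep}{piece}" if current else piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep packing pieces into the current chunk
            continue
        if current:
            chunks.append(current)
        if len(piece) <= chunk_size:
            current = piece
        else:
            # The piece alone is too long: recurse with finer separators.
            chunks.extend(recursive_split(piece, chunk_size, finer))
            current = ""
    if current:
        chunks.append(current)
    return chunks


sample = "Intro paragraph.\n\nA much longer second paragraph here."
for chunk in recursive_split(sample, chunk_size=20):
    print(repr(chunk))
```

Splitting on paragraph breaks before falling back to spaces is what keeps related sentences together, which tends to produce chunks that embed and retrieve more cleanly than fixed-width cuts.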

Runnable interfaces

LangChain’s Runnable abstraction gives chains and components a common interface (for example invoke, batch, and stream), which makes them callable, composable, and interoperable with orchestration tools.

Use RunnableSequence to string together steps; piping components with the | operator builds one for you.

RunnableLambda lets you inject custom functions.

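Use RunnableParallel (also exported as RunnableMap) to run steps side by side on the same input, RunnableBranch for conditional logic, and .bind() to attach fixed arguments to a runnable.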

This abstraction supports cloud-native deployment, reuse, and integration into CI/CD pipelines.
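The composition idea behind this interface can be sketched in a few lines of plain Python. The `Step` class and the `parallel` and `branch` helpers below are hypothetical stand-ins, not LangChain classes; they show how a shared `invoke` method plus an overloaded `|` operator is enough to build sequences, fan-out, and conditional routing.

```python
from typing import Any, Callable


class Step:
    """Hypothetical minimal 'runnable': wraps a function and composes with `|`."""

    def __init__(self, func: Callable[[Any], Any]) -> None:
        self.func = func

    def invoke(self, value: Any) -> Any:
        return self.func(value)

    def __or__(self, other: "Step") -> "Step":
        # a | b builds a new step that runs a, then feeds its output to b.
        return Step(lambda value: other.invoke(self.invoke(value)))


def parallel(**branches: Step) -> Step:
    """Run every branch on the same input and collect the results in a dict.

    This sketch runs branches one after another; a real implementation
    could run them concurrently.
    """
    return Step(lambda value: {name: step.invoke(value) for name, step in branches.items()})


def branch(condition: Callable[[Any], bool], if_true: Step, if_false: Step) -> Step:
    """Route the input to one of two steps based on a predicate."""
    return Step(lambda value: (if_true if condition(value) else if_false).invoke(value))


clean = Step(str.strip)
pipeline = clean | parallel(
    upper=Step(str.upper),
    length=Step(len),
    kind=branch(lambda s: s.isdigit(), Step(lambda s: "number"), Step(lambda s: "text")),
)
result = pipeline.invoke("  hello ")
print(result)
```

Because every piece exposes the same `invoke` method, a composed pipeline is itself a step and can be nested inside a larger one without special handling.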

Integration with frameworks and platforms

LangChain integrates smoothly with modern deployment and frontend stacks:

FastAPI / Flask: Turn LangChain apps into REST APIs.

LangServe: A drop-in way to serve chains via HTTP endpoints.

Streamlit / Gradio: Build interactive interfaces for testing or demos.

These integrations allow for fast iteration, testing, and deployment of LLM-powered features.

Caching and throttling

Reduce costs and improve performance using LangChain’s caching layer.

In-memory caching: Ideal for quick testing and development.

Persistent caching with Redis: Suitable for production workloads.

Rate-limiting and retries: Prevent overloading third-party APIs and improve system resilience.

LangChain's caching options can be combined with tracing for analytics and optimization.
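Caching and retries are both wrappers around the model call, which is why they combine cleanly. The sketch below illustrates the pattern with hypothetical helpers (`with_cache`, `with_retries`) and a fake provider that fails once; it is not LangChain's caching layer, and a production cache would also need eviction and a persistent backend.

```python
import time
from typing import Callable


def with_cache(llm: Callable[[str], str]) -> Callable[[str], str]:
    """Return a wrapper that reuses the stored answer for a repeated prompt."""
    cache: dict[str, str] = {}

    def cached(prompt: str) -> str:
        if prompt not in cache:
            cache[prompt] = llm(prompt)
        return cache[prompt]

    return cached


def with_retries(llm: Callable[[str], str], attempts: int = 3,
                 base_delay: float = 0.01) -> Callable[[str], str]:
    """Retry failed calls with exponential backoff between attempts."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")

    def retried(prompt: str) -> str:
        for attempt in range(attempts):
            try:
                return llm(prompt)
            except ConnectionError:
                if attempt == attempts - 1:
                    raise  # out of attempts: surface the error
                time.sleep(base_delay * 2 ** attempt)
        raise AssertionError("unreachable")

    return retried


calls = {"count": 0}


def flaky_llm(prompt: str) -> str:
    """Fake provider that fails on its first call, then succeeds."""
    calls["count"] += 1
    if calls["count"] == 1:
        raise ConnectionError("simulated transient failure")
    return f"answer to {prompt!r}"


llm = with_cache(with_retries(flaky_llm))
first = llm("What is RAG?")
second = llm("What is RAG?")  # served from the cache, no provider call
print(first, calls["count"])
```

The cache sits outside the retry wrapper on purpose: a cached prompt never reaches the provider at all, so it costs nothing and cannot fail.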

LangChain Hub

LangChain Hub is a library of open-source, community-built chains and prompts.

Explore use cases like summarization, Q&A, or classification.

Fork existing chains to get a head start.

Share your own templates and learn from others.

LangChain Hub is especially useful for onboarding teams and bootstrapping new projects with tried-and-tested components.

Token and cost tracking

LangChain includes built-in utilities to track:

Token consumption per step or chain.

Cost breakdowns based on provider pricing.

Cumulative usage across users or sessions.

This is crucial for budgeting, especially when deploying at scale. You can integrate these metrics with dashboards for ongoing cost and efficiency monitoring.
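The bookkeeping behind these metrics is straightforward arithmetic: tokens per step, multiplied by a per-token price. Here is a minimal sketch with made-up numbers; `UsageTracker`, the model name, and the prices in `PRICES_PER_1K` are all hypothetical and do not reflect any provider's actual pricing.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Hypothetical prices in USD per 1,000 tokens; real prices vary by provider and model.
PRICES_PER_1K = {"example-model": {"input": 0.5, "output": 1.5}}


def _empty_counts() -> dict:
    return {"input": 0, "output": 0}


@dataclass
class UsageTracker:
    tokens: dict = field(default_factory=lambda: defaultdict(_empty_counts))
    cost_usd: float = 0.0

    def record(self, step: str, model: str, input_tokens: int, output_tokens: int) -> None:
        """Add one call's token counts to the step total and update the cost."""
        self.tokens[step]["input"] += input_tokens
        self.tokens[step]["output"] += output_tokens
        price = PRICES_PER_1K[model]
        self.cost_usd += (
            input_tokens * price["input"] + output_tokens * price["output"]
        ) / 1000


tracker = UsageTracker()
tracker.record("retrieve", "example-model", input_tokens=1000, output_tokens=0)
tracker.record("answer", "example-model", input_tokens=2000, output_tokens=1000)

for step, counts in tracker.tokens.items():
    print(step, counts)
print(f"total cost: ${tracker.cost_usd:.2f}")
```

Recording usage per step rather than per request makes it easier to see which part of a chain drives the bill, for example a retrieval step that stuffs too many documents into the prompt.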

Recap

Mastering the core LangChain components will help you build powerful, flexible, and scalable LLM applications. Whether you're building simple Q&A tools or complex agent-driven systems, LangChain’s modular design lets you grow from prototype to production with confidence.

Start with the basics (models, prompts, and chains), then gradually layer in memory, retrieval, tools, and agents. As your needs expand, LangChain’s deeper capabilities in tracing, evaluation, and integration will help you maintain speed, quality, and cost-efficiency.

By learning how each module fits into the broader architecture, you’ll be better equipped to build robust AI solutions that are transparent, reusable, and easy to scale.
