An Introduction to Scaling Distributed Python Applications

Python is often dismissed as a poor fit for building scalable, distributed applications. The trick is knowing the right techniques and tools for writing distributed Python applications that scale horizontally.

With the right methods, technologies, and practices, you can make Python applications fast and able to grow to handle more work or new requirements.

In this tutorial, we will introduce you to scaling in Python and cover the key things you need to know when building a scalable, distributed system in Python.

This guide at a glance:

- What is scaling?

- CPU scaling in Python

- Daemon Processes in Python

- Event loops and Asyncio in Python

- Next steps for your learning

Learn how to scale in Python

Learn how to write Python applications that scale horizontally. You'll cover REST APIs, deployment to PaaS, and functional programming.

The Hacker’s Guide to Scaling in Python

What is scaling?

Scalability is a somewhat vague term. A scalable system is able to grow to accommodate increased load, changes, or new requirements. Scaling refers to the methods, technologies, and practices that allow an app to grow.

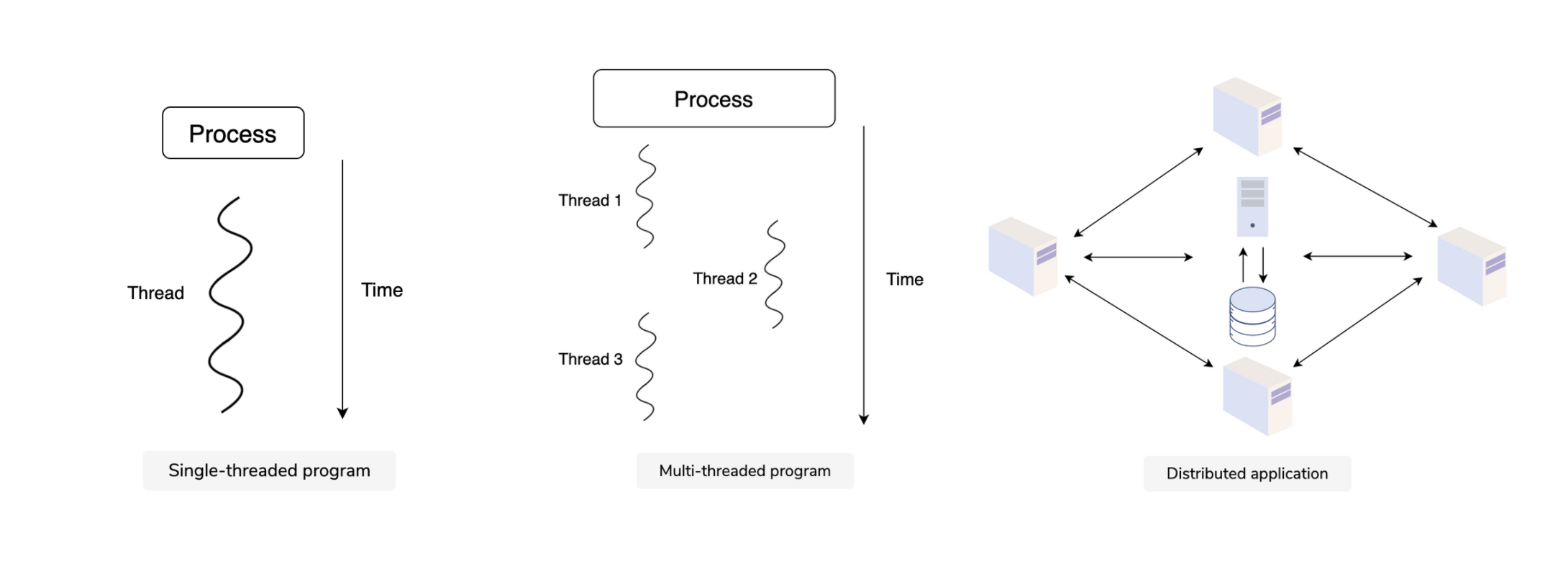

A key part of scaling is building distributed systems. This means distributing the workload across multiple workers, which can run on multiple processors or on multiple computers.

Spreading the workload over multiple hosts makes it possible to achieve horizontal scalability: the ability to handle more load by adding more nodes. It also helps with fault tolerance. If a node fails, another can pick up its traffic.

Before we look at the methods of building scalable systems in Python, let's go over the fundamental properties of distributed systems.

Single-threaded application

This type of application involves no distribution and is the simplest kind to write. However, it is limited to what a single processor can do.

Multi-threaded application

Most computers today have multiple processors or cores, which a multi-threaded application can use. Multi-threaded applications are more error-prone than single-threaded ones, but they have few failure scenarios, as no network is involved.

Network distributed application

This type of application is for systems that need to scale significantly. They are the most complicated applications to write, as they rely on a network, which brings failure scenarios of its own.

Multithreading

One way to scale across processors is multithreading. This means running code in parallel with threads, which are contained in a single process. Code will run in parallel only if more than one CPU is available. Multithreading involves many traps and issues, such as Python's Global Interpreter Lock (GIL).

CPU scaling in Python

Using multiple CPUs is one of the best options for scalability in Python. To do so, we must use concurrency and parallelism, which can be tricky to implement properly. Python offers two options for spreading your workload across multiple local CPUs: threads and processes.

Threads in Python

Threads are a good way to run a function concurrently. If there are multiple CPUs available, threads can be scheduled on multiple processing units. Scheduling is determined by the operating system.

By default, there is only one thread: the main thread, which runs your Python application. To start another thread, Python offers the threading module.

Once the second thread is started, the main thread can wait for it to complete by calling its join method. If you do not join your threads, the main thread may reach the end of the program before the other threads finish, and your program will appear to be blocked, because Python waits for all non-daemon threads to exit before shutting down.
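A minimal sketch of the start/join pattern with the threading module. The worker and run_in_thread names and the shared results list are illustrative assumptions, not code from the original tutorial.

```python
import threading


def worker(name, results):
    # Runs in the second thread; appends a greeting to the shared list
    results.append(f"hello from {name}")


def run_in_thread(name):
    results = []
    t = threading.Thread(target=worker, args=(name, results))
    t.start()  # worker() now runs concurrently with the main thread
    t.join()   # block the main thread until worker() has returned
    return results


if __name__ == "__main__":
    print(run_in_thread("thread-1"))  # prints ['hello from thread-1']
```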

To prevent this, you can configure your threads as daemons. A daemon thread is a background thread that is terminated once the main thread exits, so we don't need to call its join method.
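A minimal sketch of a daemon thread, assuming a hypothetical heartbeat worker that loops forever. Because the thread is created with daemon=True, the program can still exit without calling join().

```python
import threading
import time


def heartbeat(interval, ticks):
    # Loops forever; as a daemon thread it will not keep the interpreter alive
    while True:
        ticks.append(time.monotonic())
        time.sleep(interval)


def start_heartbeat(interval=0.05):
    ticks = []
    # daemon=True: the thread is stopped automatically when the program exits
    t = threading.Thread(target=heartbeat, args=(interval, ticks), daemon=True)
    t.start()
    return t, ticks


if __name__ == "__main__":
    thread, ticks = start_heartbeat()
    time.sleep(0.3)
    print(f"{len(ticks)} ticks recorded; exiting without join()")
    # No join(): the program ends here and the daemon thread ends with it
```

Daemon threads are stopped abruptly at shutdown, so they suit best-effort background work. Anything that must finish cleanly, such as writing a file, belongs in a non-daemon thread that you join.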

Understanding the GIL: when it hurts and when it doesn't

Python's Global Interpreter Lock (GIL) allows only one thread to execute Python bytecode at a time in a single interpreter process. That sounds like the end of scaling in Python, but it isn't:

- CPU-bound Python code (pure Python loops, heavy math in Python) is throttled by the GIL. Use multiprocessing, C extensions (NumPy, Numba, Cython), or move hot paths to Rust/C++ when needed.

- I/O-bound work (HTTP calls, DB queries, filesystem/network waits) releases the GIL during blocking operations, so threads or asyncio can improve throughput on one machine.

- Many scientific/ML libraries release the GIL inside their native code and can use multiple cores (for example, through BLAS or MKL). In those cases, multithreading can still scale CPU work.

Rule of thumb for scaling in Python:

- CPU-bound? Prefer processes, vectorization, or native extensions.

- I/O-bound? Prefer asyncio (single-threaded, many concurrent sockets) or thread pools.
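To illustrate the I/O-bound case, here is a minimal sketch using a thread pool from concurrent.futures. fake_io_call is a hypothetical stand-in that uses time.sleep to simulate a blocking network or database call. Like real blocking I/O, time.sleep releases the GIL, so the waits in different threads overlap.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_io_call(i):
    # Hypothetical stand-in for blocking I/O (HTTP request, DB query).
    # time.sleep releases the GIL, just as real blocking I/O does.
    time.sleep(0.2)
    return i * 2


def run_sequential(n):
    return [fake_io_call(i) for i in range(n)]


def run_threaded(n, workers=8):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the same order as the inputs
        return list(pool.map(fake_io_call, range(n)))


if __name__ == "__main__":
    for fn in (run_sequential, run_threaded):
        start = time.perf_counter()
        out = fn(8)
        print(f"{fn.__name__}: {out} in {time.perf_counter() - start:.2f}s")
```

On a typical machine the threaded version should take roughly the time of one call rather than eight, because the threads spend their time waiting instead of executing Python bytecode. A CPU-bound function would not get this speedup under the GIL.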

Processes in Python

Because of the GIL, multithreading is not ideal for scaling CPU-bound work. As an alternative, we can use processes instead of threads. The multiprocessing package is a good, high-level option: it provides an interface for starting new processes. Each process is a new, independent interpreter instance, so each process has its own independent global state.
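A minimal sketch showing that each process has its own global state: the child increments a module-level counter, but the parent's copy is unchanged. The names are illustrative. The `if __name__ == "__main__":` guard matters on platforms that start children with the spawn method, the default on Windows and macOS.

```python
import multiprocessing

counter = 0  # module-level global; every process has its own copy


def increment(queue):
    # Runs in the child process and changes only the child's copy
    global counter
    counter += 1
    queue.put(counter)  # report the child's value back to the parent


def run_child():
    queue = multiprocessing.Queue()
    child = multiprocessing.Process(target=increment, args=(queue,))
    child.start()
    child_value = queue.get()  # read before join() so the child can flush the queue
    child.join()
    return child_value, counter  # counter here is the parent's copy


if __name__ == "__main__":
    child_value, parent_value = run_child()
    print(f"child saw {child_value}, parent still sees {parent_value}")
    # prints: child saw 1, parent still sees 0
```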

When work can be split into independent pieces that each run for a while, it's better to use multiprocessing and fork jobs. This spreads the workload among several CPU cores.

We can also use multiprocessing.Pool, a class in the multiprocessing package that manages a pool of worker processes. With multiprocessing.Pool, we don't need to manage the processes manually, and the worker processes are reused across tasks.
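A minimal sketch of multiprocessing.Pool spreading a CPU-bound function across worker processes. cpu_heavy and parallel_sums are hypothetical names, and the inputs are arbitrary.

```python
import multiprocessing


def cpu_heavy(n):
    # Pure-Python CPU-bound work: sum of squares of 0..n-1
    return sum(i * i for i in range(n))


def parallel_sums(inputs, processes=None):
    # processes=None starts one worker per CPU (os.cpu_count())
    with multiprocessing.Pool(processes=processes) as pool:
        # map() splits the inputs across the workers and returns results in order
        return pool.map(cpu_heavy, inputs)


if __name__ == "__main__":
    print(parallel_sums([10, 100, 1_000_000]))
```

Using the pool as a context manager terminates its workers when the with block exits. That is safe here because map() has already returned all results by then.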

Keep the learning going.

Learn how to scale in Python without scrubbing through videos or documentation. Educative's text-based courses are easy to skim and feature live coding environments, making learning quick and efficient.

Daemon Processes in Python

As we learned, using multiple processes to run CPU-bound jobs is more efficient in Python. Another good option is using daemons: long-running background processes that are responsible for scheduling tasks regularly or processing jobs from a queue.

We can use cotyledon, a Python library for building long-running processes. It can be leveraged to build long-running background job workers.

To use it, we create a class named PrinterService that implements two methods of cotyledon.Service: run, which contains the main loop, and terminate, which stops it. The library does most of its work behind the scenes, such as calling os.fork and setting up the processes as daemons.

Cotyledon uses several threads internally. This is why a threading.Event object is used to synchronize our run and terminate methods.

Cotyledon runs a master process that is responsible for handling all its children. It then starts two instances of PrinterService and gives the new processes names so they're easy to track. With Cotyledon, if one of the processes crashes, it is automatically relaunched.
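Since cotyledon is a third-party package, here is a dependency-free sketch of the run/terminate pattern with a threading.Event, using a plain thread in place of cotyledon's worker process. In real cotyledon code, PrinterService would subclass cotyledon.Service and be registered with a cotyledon.ServiceManager; check the cotyledon documentation for the exact constructor and registration signatures. The interval parameter and iterations counter are illustrative additions.

```python
import threading
import time


class PrinterService:
    """Stand-in for a cotyledon.Service subclass (run/terminate pattern).

    With cotyledon, the ServiceManager would call run() in each worker
    process and terminate() on shutdown. Here a plain thread drives it.
    """

    def __init__(self, interval=1.0):
        self._shutdown = threading.Event()
        self._interval = interval
        self.iterations = 0

    def run(self):
        # Main loop: keep working until terminate() sets the event
        while not self._shutdown.is_set():
            print("Doing stuff")
            self.iterations += 1
            # wait() returns early if terminate() is called during the pause
            self._shutdown.wait(self._interval)

    def terminate(self):
        # Called from another thread; signals run() to exit its loop
        self._shutdown.set()


if __name__ == "__main__":
    service = PrinterService(interval=0.1)
    runner = threading.Thread(target=service.run)
    runner.start()
    time.sleep(0.35)
    service.terminate()
    runner.join()
    print(f"stopped after {service.iterations} iterations")
```

Waiting on the event instead of calling time.sleep lets terminate() take effect immediately rather than after the current pause ends.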

Note:

Cotyledon also offers features for reloading a program configuration or dynamically changing the number of workers for a class.

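Event loops and Asyncio in Python

An event loop is a type of control flow for a program where messages are pushed into a queue. The event loop then consumes the queue, dispatching each message to the appropriate function.

A very simple event loop could look like this in Python:

```python
def event_loop(get_message, process_message):
    # get_message() and process_message() stand in for your own functions
    while True:
        message = get_message()
        if message == "quit":  # sentinel message that stops the loop
            break
        process_message(message)
```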
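The loop above leaves get_message and process_message abstract. Here is a self-contained sketch of the queue-and-dispatch idea, assuming a hypothetical message format of (kind, payload) tuples and a dictionary that maps each kind to its handler. A real event loop would block waiting for new messages; this version simply stops when the queue is empty or it sees a "quit" message.

```python
from collections import deque


def run_event_loop(queue, handlers):
    """Consume (kind, payload) messages and dispatch each to its handler."""
    while queue:
        kind, payload = queue.popleft()
        if kind == "quit":
            break
        handlers[kind](payload)  # dispatch to the function registered for this kind


if __name__ == "__main__":
    log = []
    handlers = {
        "greet": lambda name: log.append(f"hello, {name}"),
        "add": lambda pair: log.append(sum(pair)),
    }
    queue = deque([("greet", "Ada"), ("add", (2, 3)), ("quit", None), ("greet", "never")])
    run_event_loop(queue, handlers)
    print(log)  # prints ['hello, Ada', 5]
```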

asyncio is the event loop framework in Python 3's standard library (added in Python 3.4); its name stands for asynchronous input/output. It refers to a programming paradigm that achieves high concurrency using a single thread and an event loop. This is a good alternative to multithreading for the following reasons:

- It's difficult to write code that is thread safe. With asynchronous code, you know exactly where the code will switch between tasks: at each await.

- Each thread needs its own stack, which consumes memory. With async code, all coroutines run in a single thread and share its stack.

- Threads are OS structures, so they require more memory. This is not the case for asyncio tasks, which are lightweight objects managed by the event loop.
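To make the single-thread point concrete, here is a minimal sketch that runs 100 simulated network calls concurrently with asyncio.gather. asyncio.sleep stands in for real I/O (an assumption for illustration), and the set of thread IDs confirms that every coroutine ran on the same thread.

```python
import asyncio
import threading
import time


async def fetch(i, thread_ids):
    # Record which OS thread runs this coroutine
    thread_ids.add(threading.get_ident())
    # asyncio.sleep stands in for a network call; it yields to the event loop
    await asyncio.sleep(0.2)
    return i


async def main(n=100):
    thread_ids = set()
    # gather() runs all coroutines concurrently and keeps results in input order
    results = await asyncio.gather(*(fetch(i, thread_ids) for i in range(n)))
    return results, thread_ids


if __name__ == "__main__":
    start = time.perf_counter()
    results, thread_ids = asyncio.run(main())
    elapsed = time.perf_counter() - start
    print(f"{len(results)} tasks, {len(thread_ids)} thread(s), {elapsed:.2f}s")
```

All 100 waits overlap, so the run should take roughly 0.2 seconds rather than 20, and thread_ids holds a single entry.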

Asyncio is based on the concept of event loops. When asyncio creates an event loop, the application registers the functions to call back when a specific event happens. These functions are a special type called coroutines. A coroutine works similarly to a generator: it gives control back to its caller (here, the event loop) when it reaches an await expression, and later resumes from that point.

A coroutine such as hello_world is defined like a function, except that its definition starts with the keyword async def. This coroutine prints a message and returns a result. The event loop runs the coroutine and terminates when the coroutine returns.
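A minimal version of such a hello_world coroutine might look like this; the printed message and the return value 42 are assumptions. It uses asyncio.run (Python 3.7+), which creates an event loop, runs the coroutine to completion, closes the loop, and returns the coroutine's result. Older code did the same with loop.run_until_complete.

```python
import asyncio


async def hello_world():
    # A coroutine: defined with "async def" and driven by the event loop
    print("hello world!")
    return 42


if __name__ == "__main__":
    # asyncio.run() starts a loop, runs hello_world(), then closes the loop
    result = asyncio.run(hello_world())
    print(result)  # prints 42
```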

I/O-bound throughput: asyncio + ASGI for web services

For network-heavy APIs (chatty microservices, streaming, websockets), ASGI servers (e.g., Uvicorn, Hypercorn) paired with FastAPI/Starlette unlock high concurrency on a single node. Compared to WSGI stacks, ASGI embraces async/await end-to-end so your handlers don’t block the loop. Patterns that boost scaling in Python for I/O:

- Connection pooling: Use async DB/HTTP clients (e.g., asyncpg, httpx) with bounded pools to avoid socket exhaustion.

- Backpressure: Add timeouts, semaphores, and bounded queues (asyncio.Semaphore, anyio) to protect upstream services.

- Batching: Combine many small requests into fewer larger ones (e.g., write-behind buffers, batched inserts).

- Streaming: Prefer server/client streams for large payloads to avoid loading entire bodies in memory.
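A minimal sketch of the backpressure pattern using only the standard library: an asyncio.Semaphore caps the number of in-flight calls, and asyncio.wait_for adds a per-call timeout. call_upstream is a hypothetical stand-in for a real HTTP or database call, and the limit, timeout, and delay values are arbitrary.

```python
import asyncio


async def call_upstream(i, delay):
    # Hypothetical stand-in for an HTTP/DB call to an upstream service
    await asyncio.sleep(delay)
    return i


async def bounded_calls(n, limit=5, timeout=1.0, delay=0.05):
    sem = asyncio.Semaphore(limit)  # at most `limit` calls in flight at once
    in_flight = 0
    peak = 0

    async def guarded(i):
        nonlocal in_flight, peak
        async with sem:
            in_flight += 1
            peak = max(peak, in_flight)
            try:
                return await asyncio.wait_for(call_upstream(i, delay), timeout)
            except asyncio.TimeoutError:
                return None  # give up on slow calls instead of letting them pile up
            finally:
                in_flight -= 1

    results = await asyncio.gather(*(guarded(i) for i in range(n)))
    return results, peak


if __name__ == "__main__":
    results, peak = asyncio.run(bounded_calls(20))
    print(f"{len(results)} results, peak concurrency {peak}")
```

Returning None on timeout is one design choice; a real service might instead retry with backoff or surface an error to its caller.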

For classic WSGI apps (Flask/Django), scale with Gunicorn + workers and put a reverse proxy (Nginx/Envoy) in front. For new, highly concurrent APIs, ASGI + Uvicorn is often the simpler path.

Next steps for your learning

Congrats on making it to the end! You should now have a good introduction to the tools we can use to scale in Python. We can leverage these tools to build distributed systems effectively. But there is still more to learn. Next, you’ll want to learn about:

- Running coroutines cooperatively

- The aiohttp library

- Queue-based distribution

- Lock management

- Deploying on PaaS

To get started with these concepts, check out Educative's comprehensive course The Hacker's Guide to Scaling Python. You'll cover everything from concurrency to queue-based distribution, lock management, and group memberships. At the end, you'll get hands-on experience building a REST API in Python and deploying an app to a PaaS.

By the end, you’ll be more productive with Python, and you’ll be able to write distributed applications.

Happy learning!