Deep Learning Trends: top 20 best uses of GPT-3 by OpenAI

This article was written by Aman Anand and was originally published on Dev.to. Aman is a data science and machine learning enthusiast. Today, he shares his knowledge of Natural Language Processing and deep learning technologies.

After seeing a lot of buzz on social media about GPT-3 and how it will change the world, I decided to put together a collection of some of the best uses of GPT-3 by OpenAI.

Generative Pre-trained Transformer 3 (GPT-3) is an autoregressive language model for creating human-like text with deep learning technologies.

GPT-3 has been hailed as the next big thing since Netscape Navigator, and many expect it to change the world. I believe that GPT-3 will serve as a great helper to humankind in all fields, including software development, teaching, writing poetry, and even comprehending large volumes of text.

So, let’s take a walk through the streets of Twitter and learn about 20 amazing uses, inventions, and applications of GPT-3 that illustrate its immense potential. The original Tweets can be found below.


1. Code Oracle#

Not only can GPT-3 generate code for you, but it can also explain code you have written. Here is an example where GPT-3 explains a piece of Python code in detail.

See the original post

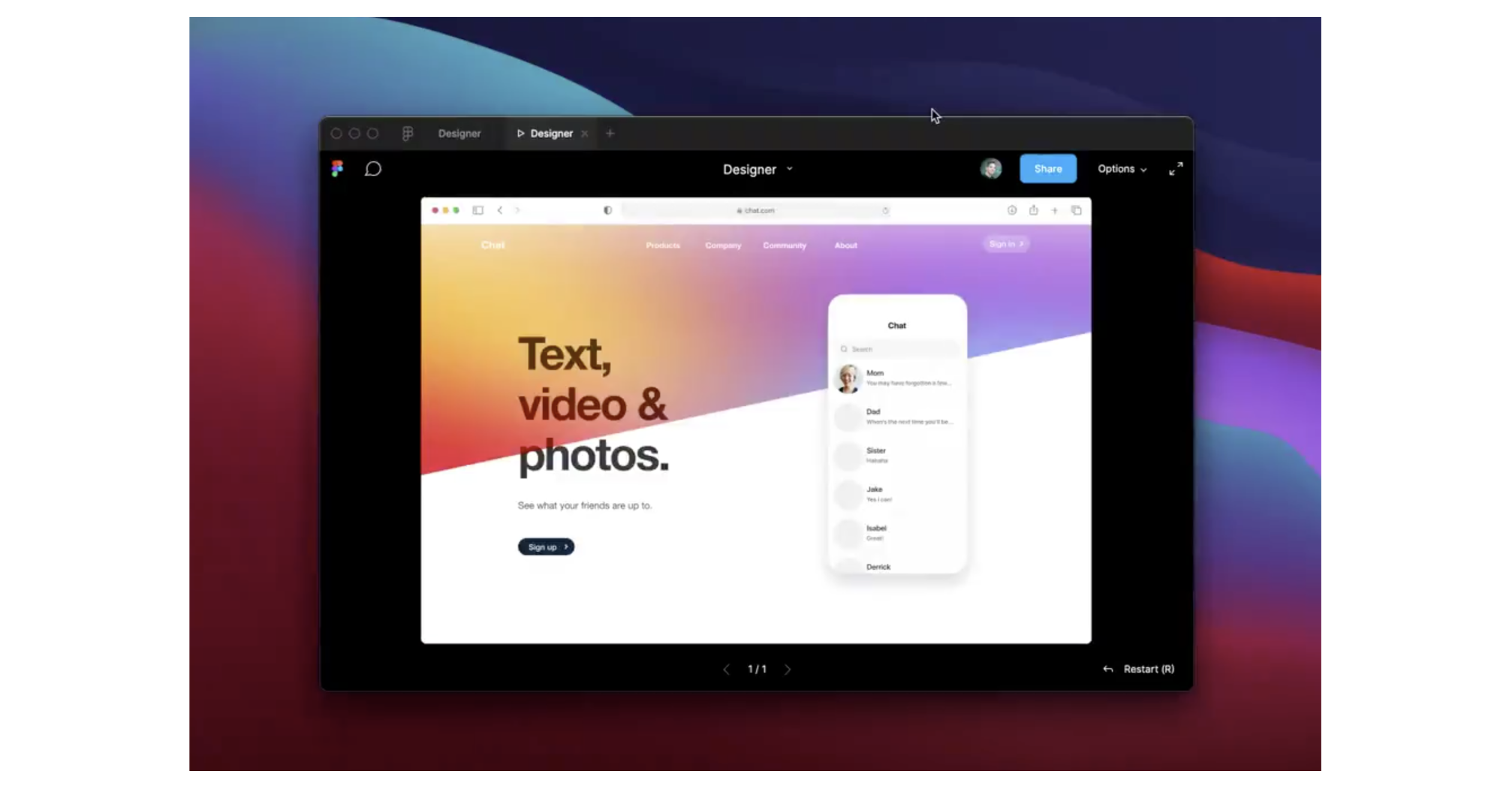

2. Designer#

Here is a beautiful integration of a Figma plugin and GPT-3 that generates web templates. The developer inputs the following text:

“An app that has a navigation bar with a camera icon, “Photos” title, and a message icon. A feed of photos with each photo having a user icon, a photo, a heart icon, and a chat bubble icon”

And generates this beautiful, simple application.

See the original post

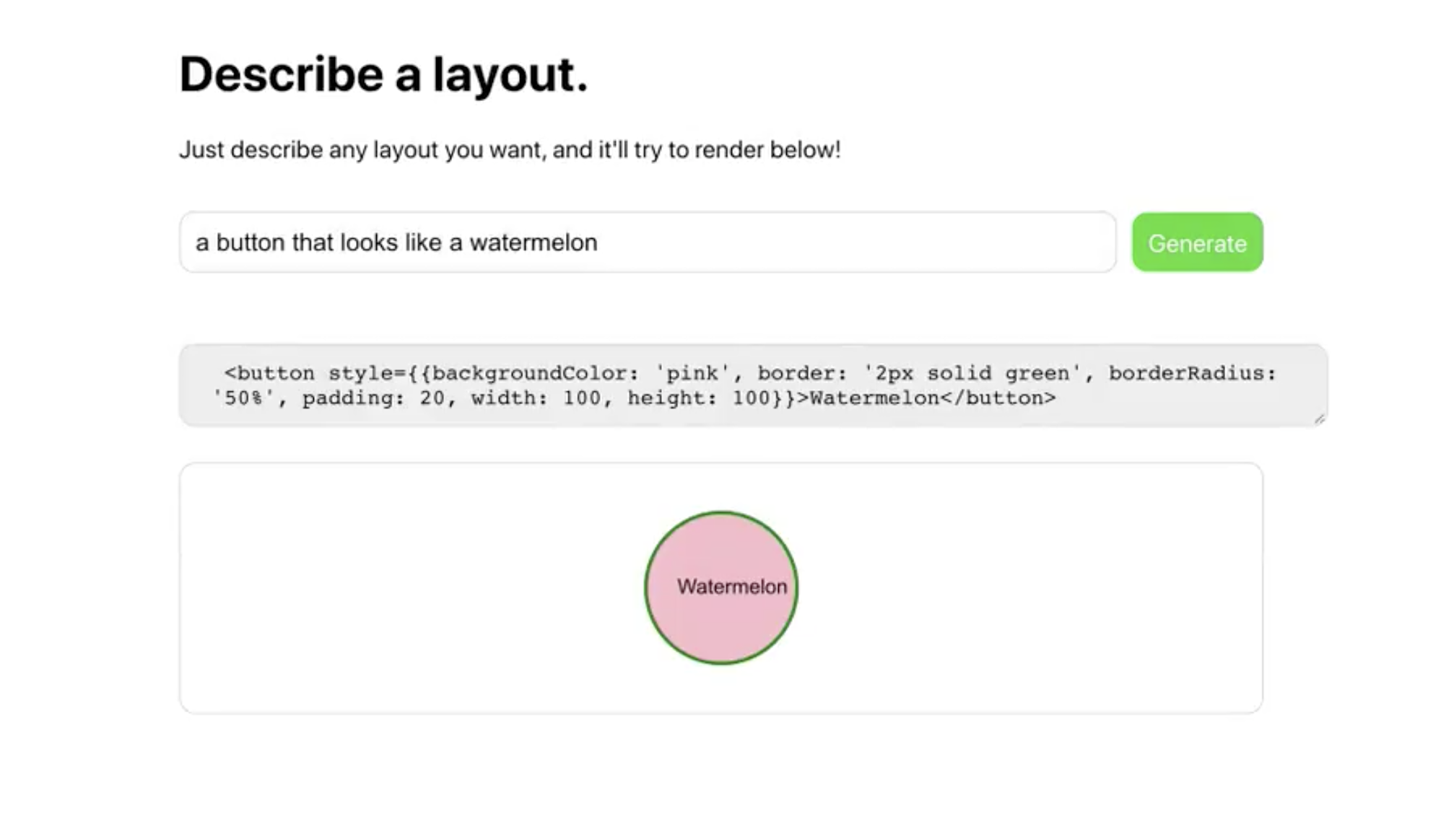

3. JSX Layout Maker#

This was one of the first examples of GPT-3 generating code to catch the attention of the tweeple. It shows how to generate a JSX layout just by describing it in plain English.

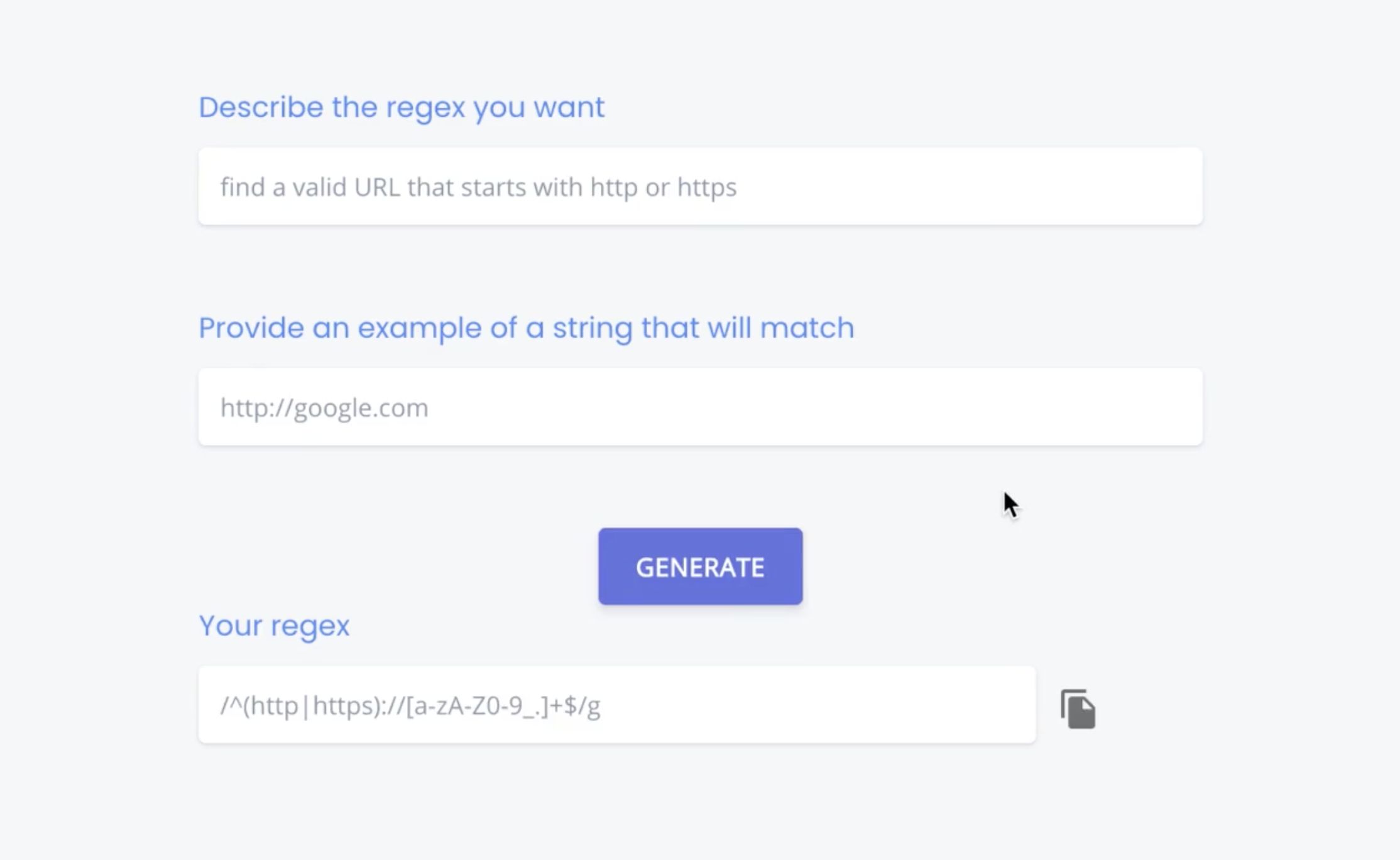

4. Regex Generator#

In this example, we observe that GPT-3 is also capable of generating Regex for different use-cases. You input the Regex you want in plain English, provide an example string that will match, and it generates the Regex in seconds.

See the original post
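A generated pattern is worth checking before you rely on it. Here is a minimal sketch in Python, assuming the model's reply is available as a plain string. The function name `check_generated_regex` and the sample pattern are hypothetical and are not taken from the original Tweet.

```python
import re


def check_generated_regex(pattern: str, should_match: list[str], should_not_match: list[str]) -> bool:
    """Return True only if the pattern compiles and behaves as expected on the samples."""
    try:
        compiled = re.compile(pattern)
    except re.error:
        # The model returned something that is not a valid regular expression.
        return False
    matches_ok = all(compiled.fullmatch(s) for s in should_match)
    rejects_ok = not any(compiled.fullmatch(s) for s in should_not_match)
    return matches_ok and rejects_ok


# Hypothetical model output for "a date such as 12/31/2020".
candidate = r"\d{2}/\d{2}/\d{4}"
print(check_generated_regex(candidate, ["12/31/2020"], ["2020-12-31"]))
```

Supplying a few strings that should not match is a cheap way to catch patterns that are too permissive.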

5. Website Mocker#

The brilliant combination of Figma and GPT-3 has once again proved to be game-changing. In this example, GPT-3 clones a website after receiving its URL.

The developer inputs the following text:

“A website like stripe that is about a chat app”

And it outputs the following web app:

See the original post

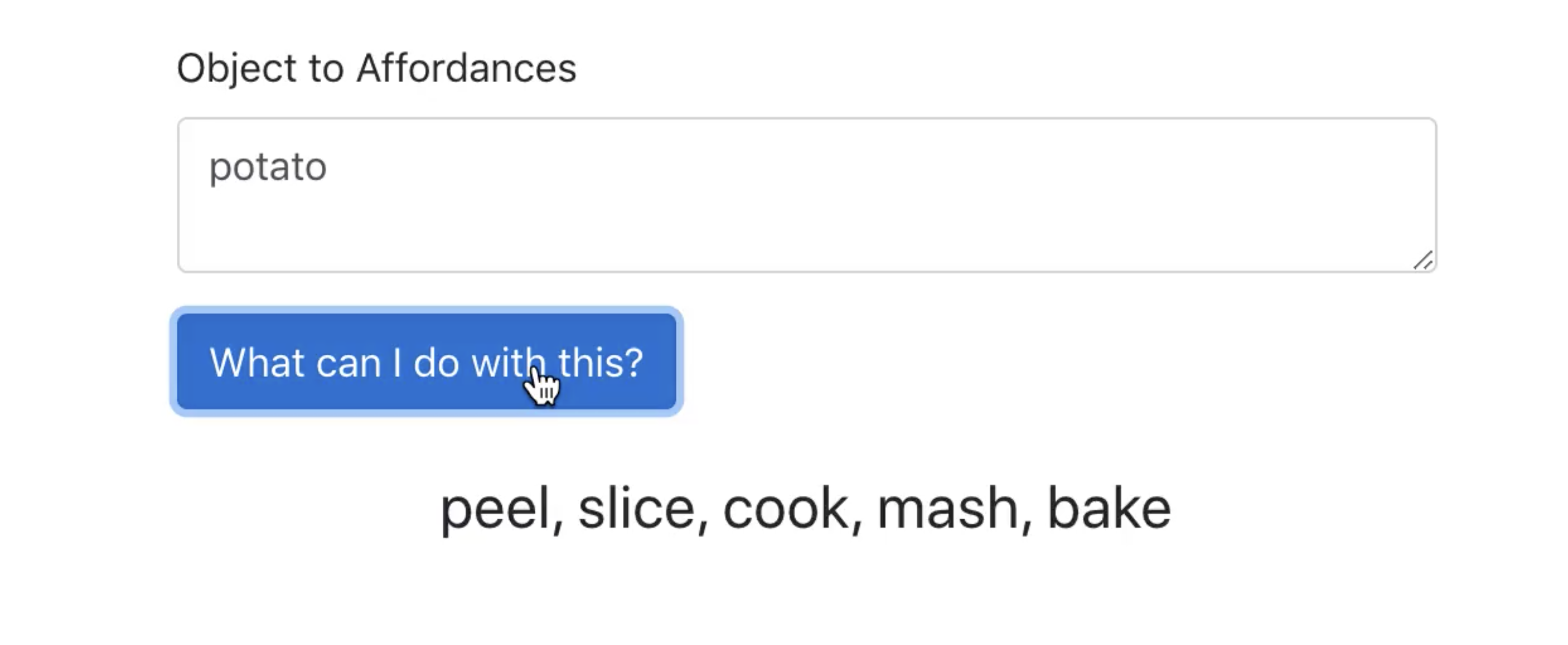

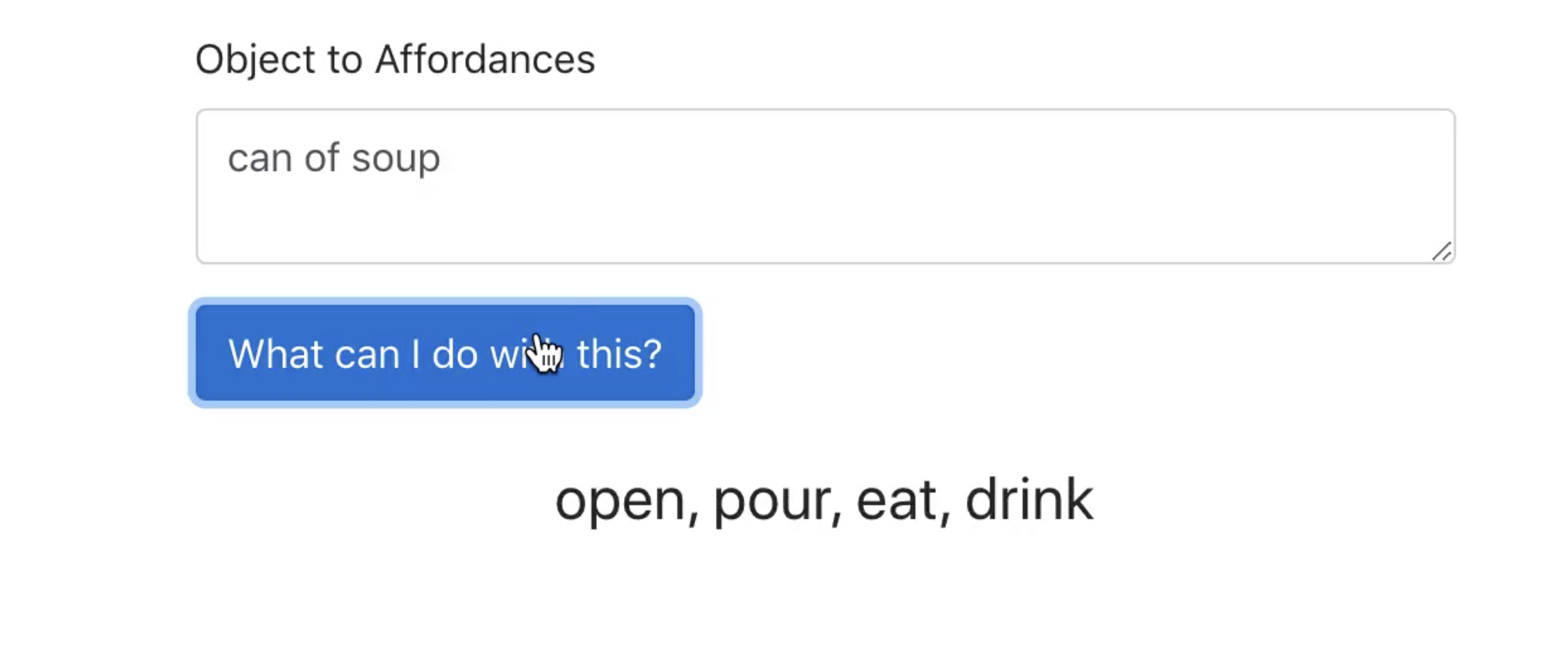

6. Object use-case generation#

Here, we get an interesting example where GPT-3 tells us what can be done with a given object.

7. Autoplotter#

In this Tweet, we see that GPT-3 can also generate charts and plots from plain English. Here, it uses SVG to produce detailed charts.

See the original post

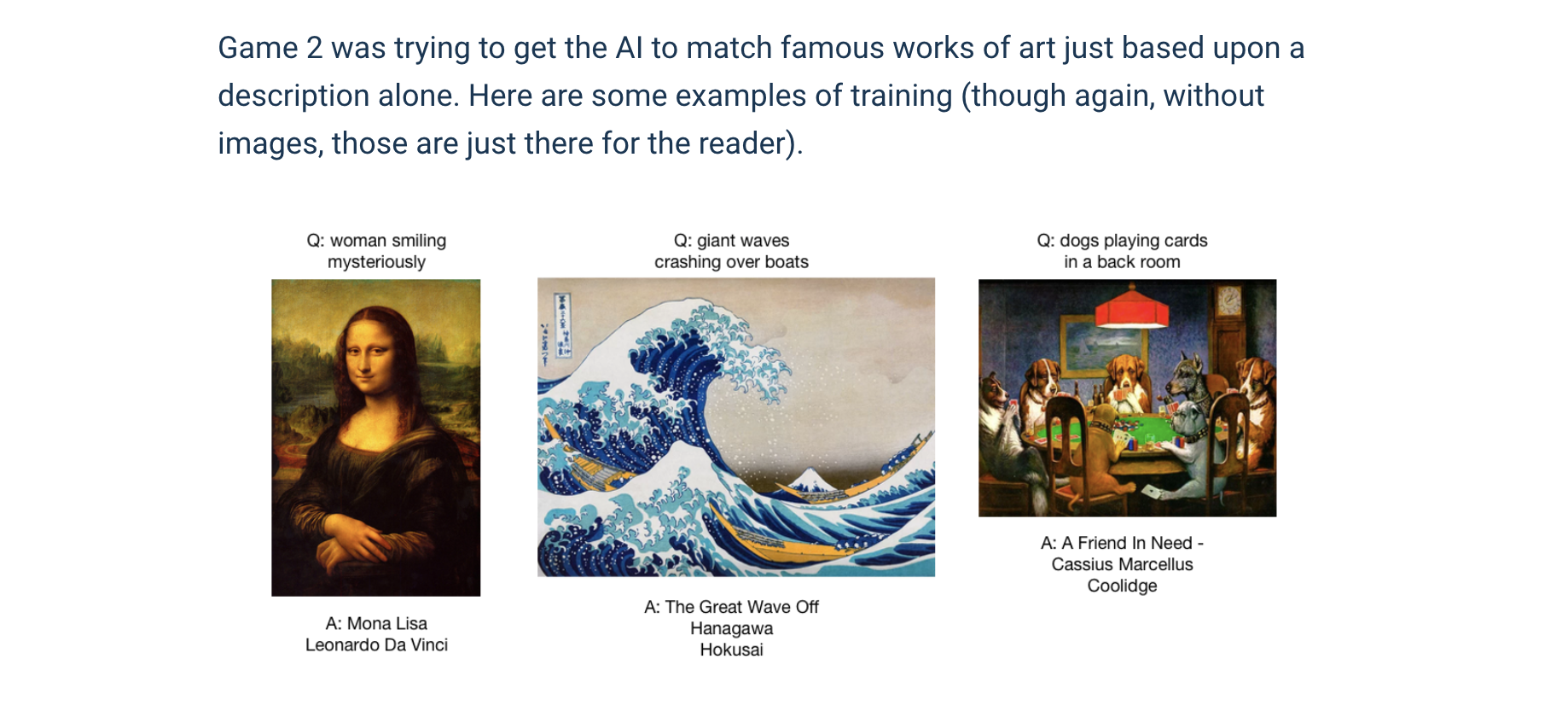

8. A complete evaluation#

This Tweet explains the basic use of GPT-3 text generation in many interesting ways. It shows that GPT-3 can play games like finding analogies, identifying paintings from naive descriptions, generating articles, and also recommending books. And it requires no training or massive data uploads.

See the original post

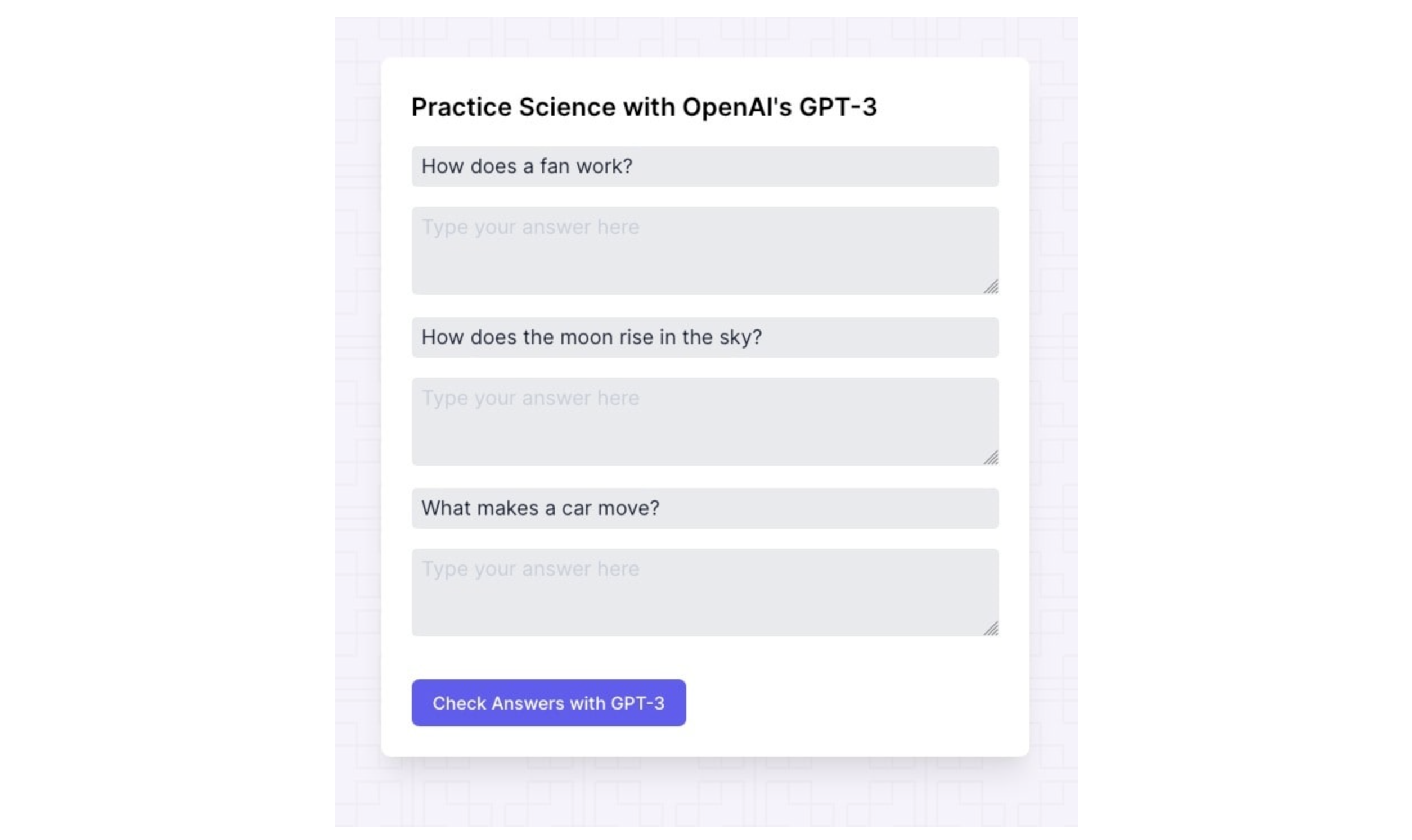

9. Quiz Producer#

Here is an awesome helper for teachers and students. It generates practice quizzes on any topic and also explains the answers to the questions in detail.

See the original post

10. Learn from Anyone#

As the name suggests, this tool helps you learn anything from anyone, whether it is robotics from Elon Musk, physics from Newton, relativity from Einstein, or literature from Shakespeare.

See the original post

11. The Philosopher#

A lot has been said about the pros and cons of GPT-3. What happens when the AI itself turns philosopher and answers questions about the effects of GPT-3 on humanity? Here is an example where GPT-3 writes an essay explaining itself and how it will affect humankind.

See the original post

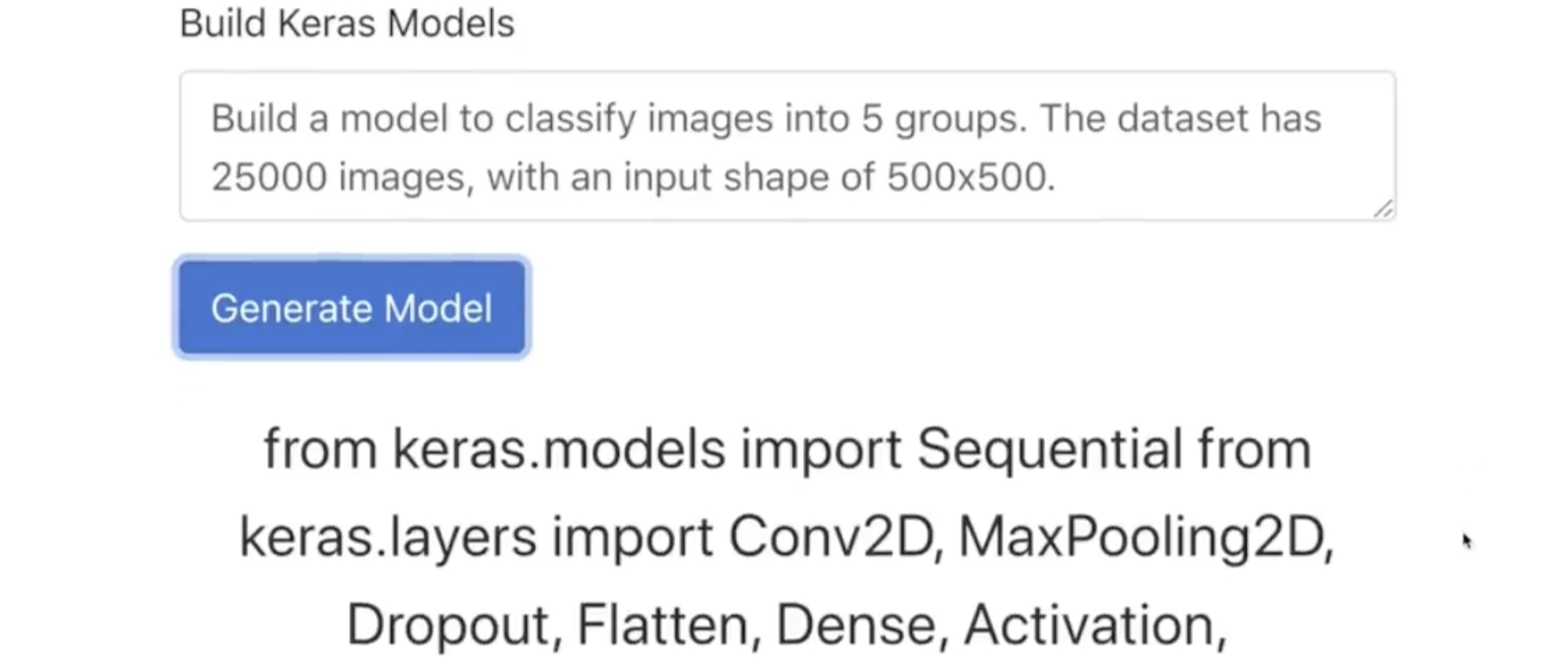

12. The AI recursion#

What will happen when machine learning models start writing machine learning models? It sounds like the plot of a sci-fi movie, but it’s a reality now: GPT-3 can write ML models for specific datasets and tasks. Here, the developer used GPT-3 to generate code for an ML model just by describing the dataset and the required output.

See the original post

13. The Meme Maker#

Today in the world of social media, we see a lot of memes. GPT-3 can generate memes too. It can understand the nuances of meme-making and generate hilarious memes with a few prompts.

See the original post

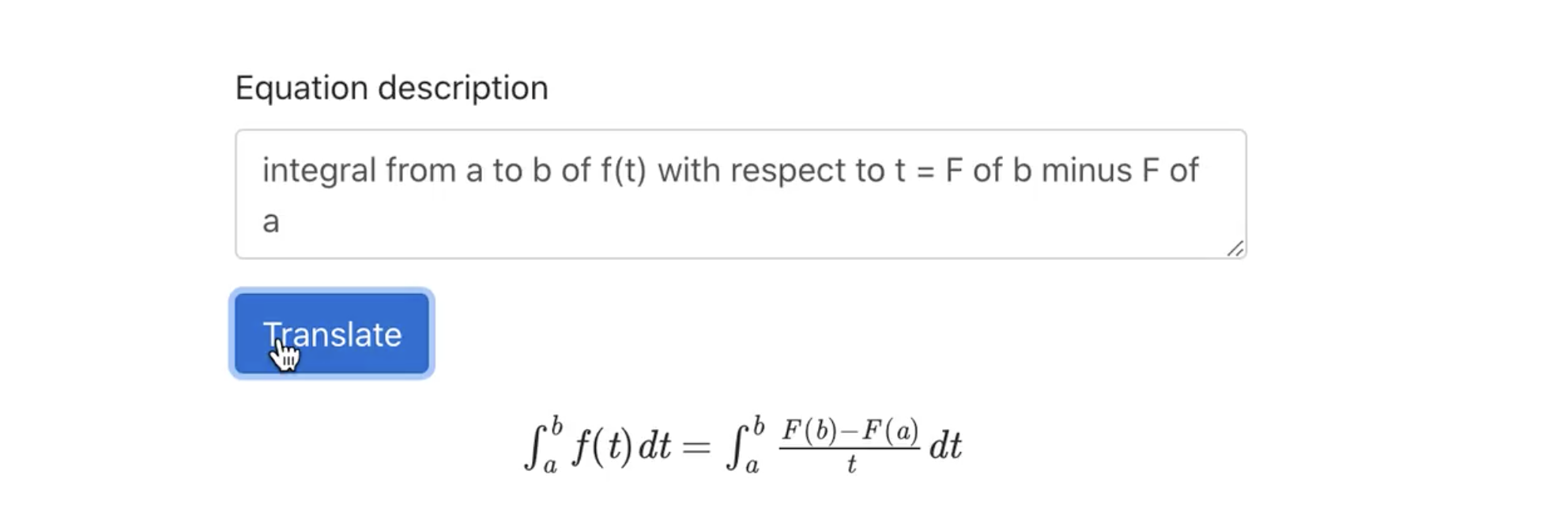

14. The LaTeX Fabricator#

LaTeX can be quite tiresome if we have to write complex equations, but GPT-3 comes to the rescue for this mundane task. Just provide an equation in plain English, and it will produce the LaTeX equations.

See the original post

15. The Animator#

GPT-3 can also act as an animator. It can generate frame-by-frame animations using the Figma plugin and a text prompt.

See the original post

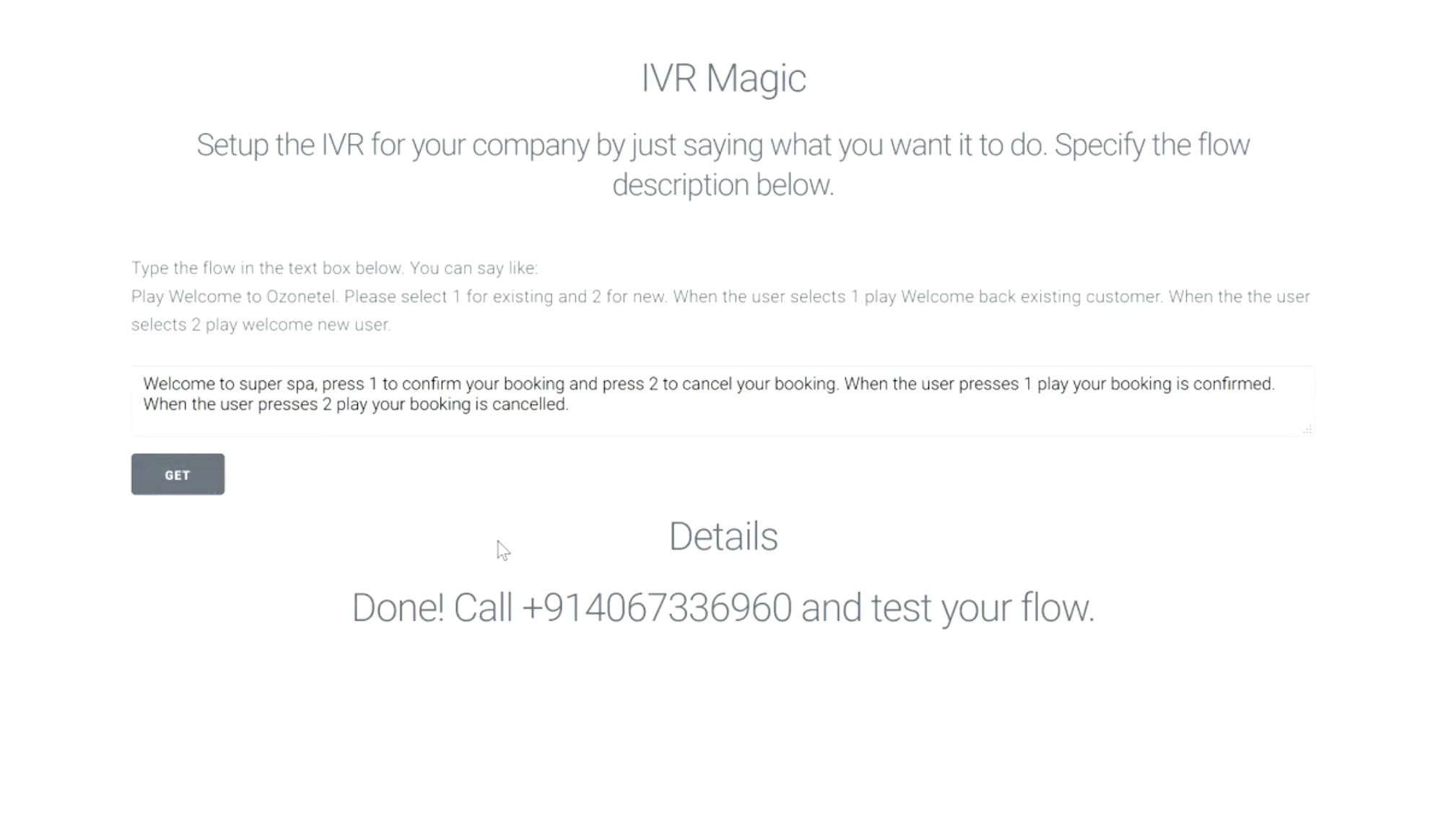

16. The Interactive Voice Response creator#

Most of us have dealt with an IVR system in day-to-day life when booking an appointment. GPT-3 can generate an IVR flow, and it does so pretty fast.

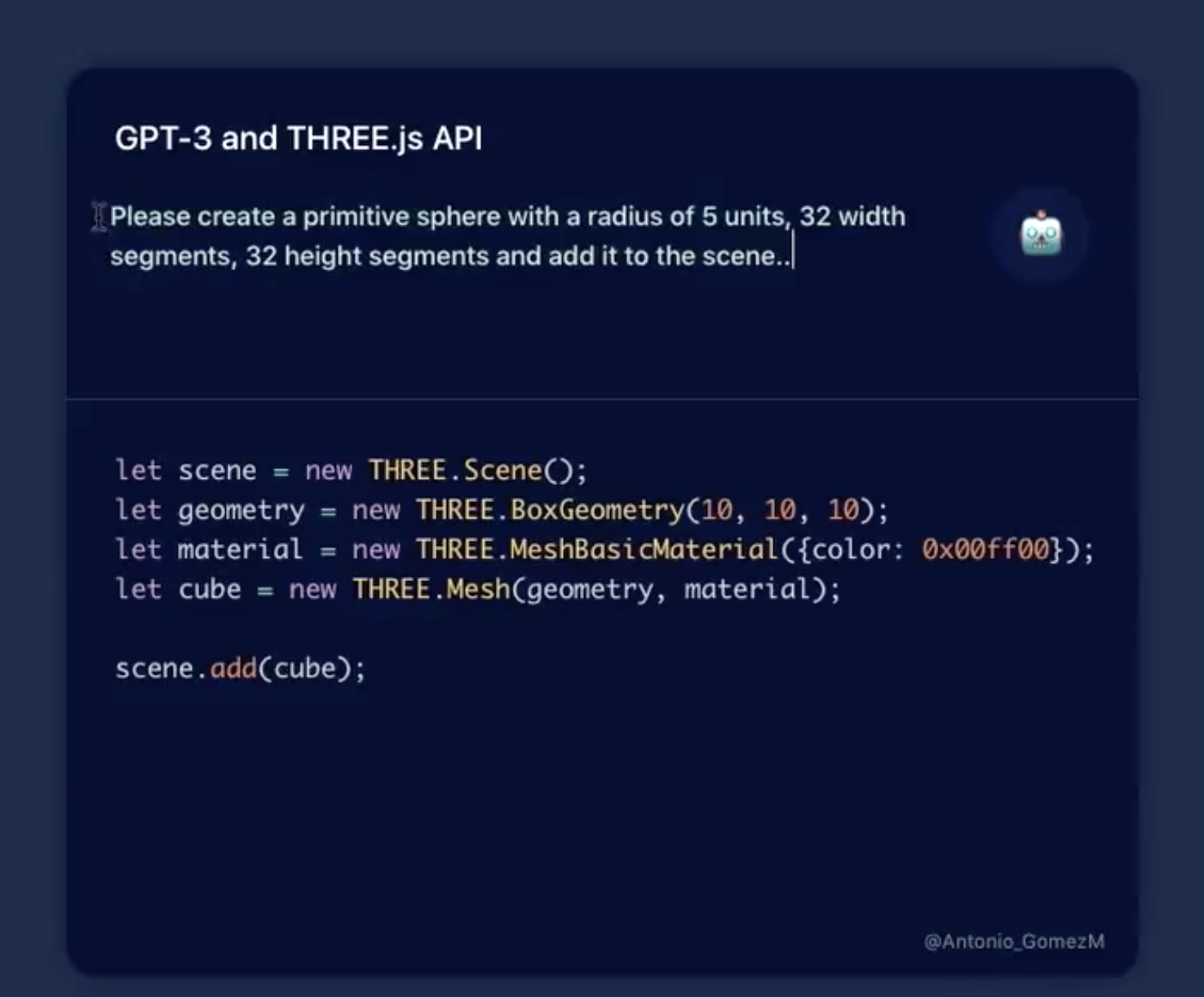

17. The 3D scene generator#

GPT-3 can generate 3D scenes using the three.js JavaScript API. It generates code for the 3D scene if you describe the scene to it. Currently, it can generate only simple 3D objects.

See the original post

18. The Resume Creator#

How many times have we struggled with the task of creating an effective and concise resume for our job interviews? GPT-3 can build resumes for us too. You write a description of yourself, and it gives you concise points that add value to your resume.

See the original post

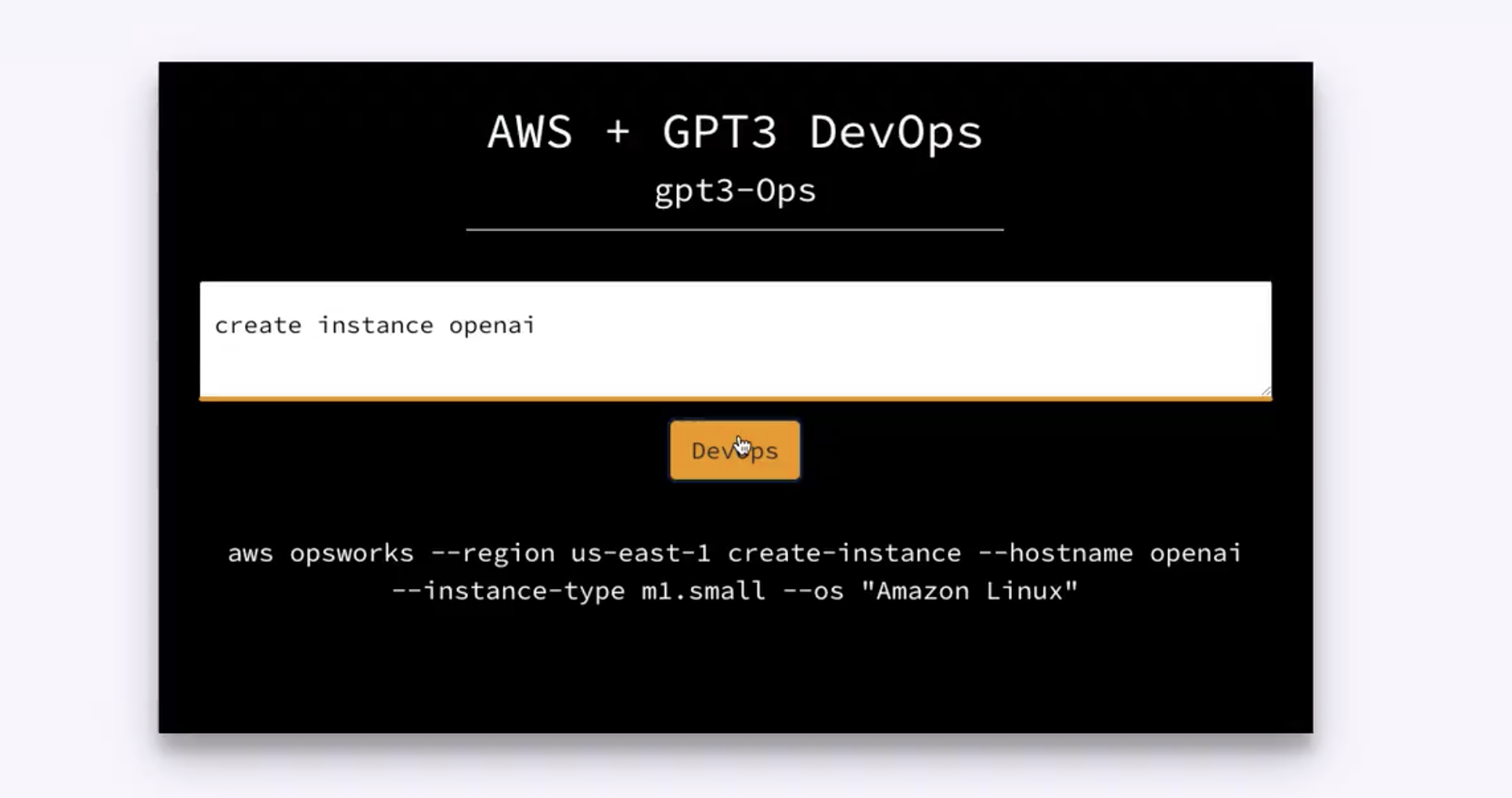

19. The DevOps engineer#

GPT-3 can not only write beautiful articles and create apps and websites, but it can also help you with DevOps. It is like a multidisciplinary developer with a lot of abilities.

See the original post

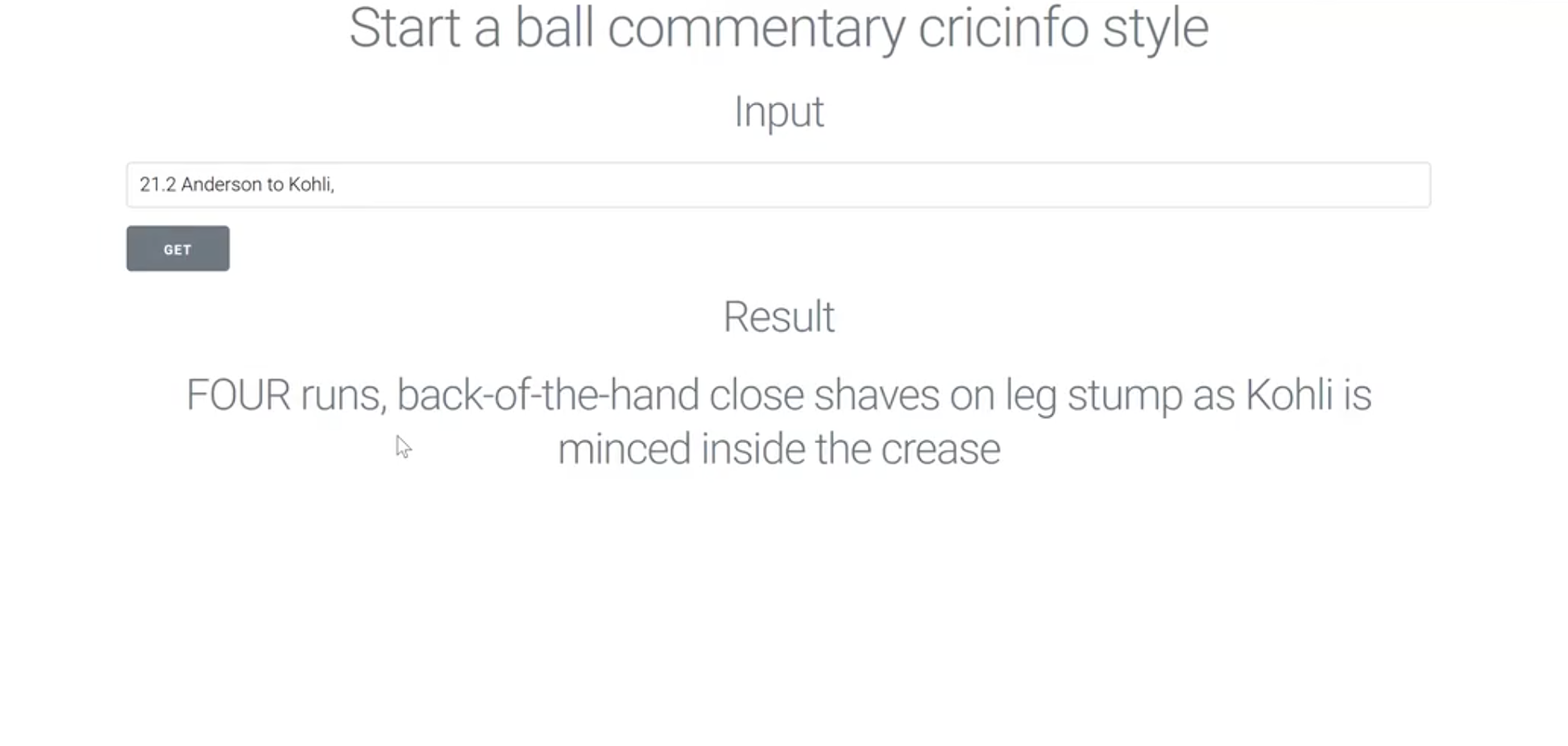

20. The Cricket commentator#

The job of sports commentators may also be at risk with the advent of GPT-3. Sites like Cricbuzz and Cricinfo could use GPT-3 to generate textual cricket commentary.

Beyond GPT-3: How the LLM landscape has evolved#

When GPT-3 was first released, it felt like the future had arrived. But in the years since, language models have evolved rapidly. Today, GPT-3.5 powers many everyday applications thanks to faster responses and lower costs, while GPT-4 delivers far more advanced reasoning, problem-solving, and multi-step planning capabilities.

Knowing what each model is suited for helps you pick the right one for the job:

GPT-3.5 is ideal for tasks like chatbots, content generation, and lightweight automation.

GPT-4 excels at complex reasoning, structured decision-making, and handling nuanced language tasks.

There’s also a growing ecosystem of specialized GPT models — from code generation to legal document analysis — designed to solve specific problems with higher reliability.

Limitations and safety considerations you need to know#

Language models have become incredibly powerful, but they’re not magic. Here are a few real-world constraints every developer should keep in mind:

Hallucinations: Models can produce text that sounds plausible but is factually incorrect. Always validate results before using them in production.

Context limits: Each model has a maximum input length. For large documents or datasets, you’ll need to split text into chunks or use retrieval techniques.

Bias and safety: LLMs can reflect biases present in their training data. Use moderation filters and human-in-the-loop review for sensitive applications.

Cost and latency: API usage is priced per token, and complex tasks can get expensive quickly. Caching, batching, and careful prompt design help control costs.

Treat these limitations as design constraints, not deal-breakers — planning for them from the start leads to more robust applications.
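The chunking idea from the context-limits point can be sketched in a few lines of Python. This is a minimal sketch under one loud assumption: it counts words, while real model limits are measured in tokens, so treat the numbers as a rough proxy. The function name `chunk_words` and its parameters are hypothetical.

```python
def chunk_words(text: str, max_words: int = 200, overlap: int = 20) -> list[str]:
    """Split text into chunks of at most max_words words.

    Neighbouring chunks share `overlap` words so that a sentence cut at a
    boundary still appears whole in at least one chunk.
    Assumption: words stand in for tokens; a real tokenizer would differ.
    """
    if max_words <= 0 or not 0 <= overlap < max_words:
        raise ValueError("need max_words > 0 and 0 <= overlap < max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


sample = " ".join(str(i) for i in range(10))
print(chunk_words(sample, max_words=4, overlap=1))
```

Each chunk can then be sent to the model separately, or indexed for retrieval.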

Real-world use cases that matter today#

Early GPT-3 demos focused on creative experiments, but today’s most impactful applications solve real business problems. Here are some of the most valuable ways GPT-4 and its successors are being used:

Customer support: Automate first-line support with contextual, natural-sounding responses.

Document summarization: Distill long legal, medical, or financial documents into concise, actionable summaries.

Code generation and review: Assist developers with inline explanations, refactoring suggestions, and automated code writing.

Semantic search and Q&A: Build intelligent knowledge assistants that retrieve and synthesize answers from vast document sets.

Data extraction: Turn unstructured text into structured, machine-readable formats for downstream analytics.

These aren’t just interesting experiments — they’re production-ready solutions already delivering value across industries.
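For data extraction in particular, it helps to validate whatever the model returns before passing it downstream. Here is a minimal Python sketch, assuming the model has been asked to reply with a JSON object. The schema in `REQUIRED_FIELDS`, the function name `parse_extraction`, and the sample reply are all hypothetical.

```python
import json

# Hypothetical schema for an invoice-style record.
REQUIRED_FIELDS = {"name": str, "amount": float, "currency": str}


def parse_extraction(raw_reply: str) -> dict:
    """Parse a model reply expected to be a JSON object and check field names and types."""
    data = json.loads(raw_reply)  # raises json.JSONDecodeError (a ValueError) on malformed output
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    record = {}
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in data:
            raise ValueError(f"missing field: {field}")
        value = data[field]
        if expected_type is float and isinstance(value, int) and not isinstance(value, bool):
            value = float(value)  # accept integers where a number is expected
        if not isinstance(value, expected_type):
            raise ValueError(f"wrong type for {field}")
        record[field] = value
    return record


# Hypothetical reply for the text "Invoice from Acme for 1200 euros".
reply = '{"name": "Acme", "amount": 1200, "currency": "EUR"}'
print(parse_extraction(reply))
```

Rejecting malformed replies early means a hallucinated or incomplete record never reaches your analytics pipeline.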

Modern development patterns for building with GPT#

Using GPT effectively today is about more than just writing a clever prompt. Modern applications rely on proven design patterns that make LLMs more reliable, accurate, and scalable:

Prompt engineering: Use techniques like few-shot prompting, chain-of-thought reasoning, or role prompts to guide the model’s thinking.

Retrieval-augmented generation (RAG): Combine GPT with a vector database to ground answers in external knowledge sources.

Tool use and function calling: Enable the model to call APIs, run calculations, or access databases when it needs external data.

Workflow orchestration: Break complex tasks into smaller steps and use multiple prompts or models together to solve them reliably.
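To make the retrieval-augmented generation item concrete, here is a toy Python sketch. It is built on a big assumption: instead of real embeddings and a vector database, it uses plain word counts and cosine similarity as a stand-in. All function names and the sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Toy stand-in for an embedding: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the question."""
    q = vectorize(question)
    return sorted(documents, key=lambda d: cosine(q, vectorize(d)), reverse=True)[:k]


def build_prompt(question: str, documents: list[str]) -> str:
    """Place the retrieved passages in the prompt so the answer is grounded in them."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"


docs = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "Shipping is free for orders over 50 dollars.",
]
print(build_prompt("How long do refunds take?", docs))
```

The resulting prompt would then be sent to the model. Swapping `vectorize` for a real embedding model changes the quality of retrieval, not the overall shape of the pattern.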

These techniques turn GPT from a text generator into the “brain” of sophisticated, production-ready systems.
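The tool-use pattern can be illustrated without any particular vendor's API. In this minimal Python sketch, the model is assumed to reply with a small JSON object naming a tool and its arguments. That JSON shape, the `TOOLS` registry, and the two example tools are hypothetical and do not reproduce any provider's actual function-calling format.

```python
import json
from typing import Callable


def add(a: float, b: float) -> float:
    return a + b


def word_count(text: str) -> int:
    return len(text.split())


# Only functions listed here can ever be invoked on the model's behalf.
TOOLS: dict[str, Callable] = {"add": add, "word_count": word_count}


def run_tool_call(model_reply: str):
    """Execute a tool call described as JSON: {"tool": name, "arguments": {...}}."""
    call = json.loads(model_reply)
    name = call.get("tool")
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


# Hypothetical model reply asking the application to add two numbers.
print(run_tool_call('{"tool": "add", "arguments": {"a": 2, "b": 3}}'))
```

Keeping an explicit registry means the model can only request actions you have deliberately exposed, which matters once tools touch databases or external APIs.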

Wrapping up#

GPT-3 is amazing, and there are so many more things it can be used for. I hope this inspired you to look into GPT-3 and other deep learning technologies. Machine learning is our future, and the possibilities truly seem limitless. It’s important that we don’t fall behind or miss out on these fun, incredible tools.

If you want to learn more about machine learning and AI, check out Educative’s Adaptilab Machine Learning Engineer courses. These five courses are focused on giving you the practical skills to solve real-world ML problems and applications, rather than emphasizing complex theory.

By the time you’re done, you’ll have the ability to get hired as a Machine Learning developer.

Happy learning!

Continue reading about AI and Deep Learning#

- 6 examples of how artificial intelligence is used in the arts

- Understanding racial bias in machine learning algorithms

- Build a Deep Learning Text Generator Project with Markov Chains